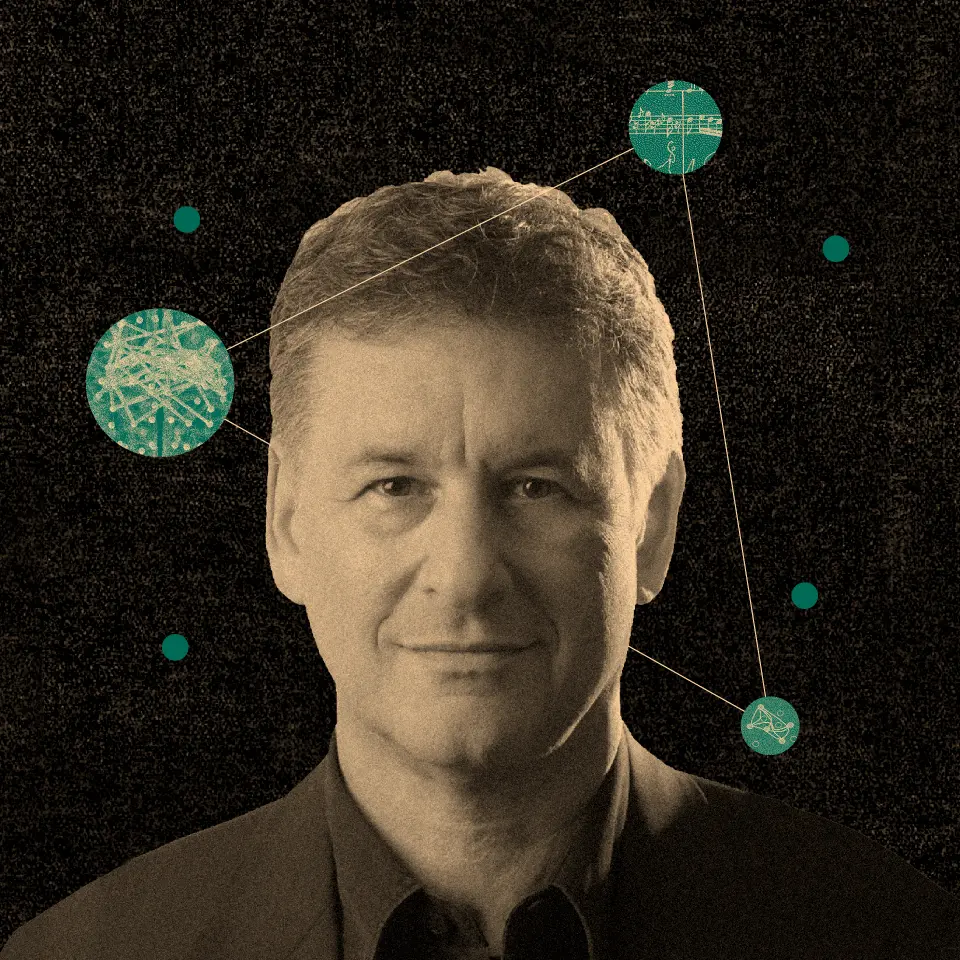

After growing up with the sounds of Cheyenne, software engineer Michael Running Wolf is now harnessing AI to ensure the survival of this language and others.

Indigenous languages are facing a steep decline: 90% are at risk of not being passed on to younger generations, while 70% are spoken by only a handful of individuals, predominantly elders.

"Essentially, we're racing against time. Within five to 10 years, we risk losing a significant part of the cultural and linguistic heritage in the United States," explains Michael Running Wolf, a software engineer with roots in the Cheyenne community.

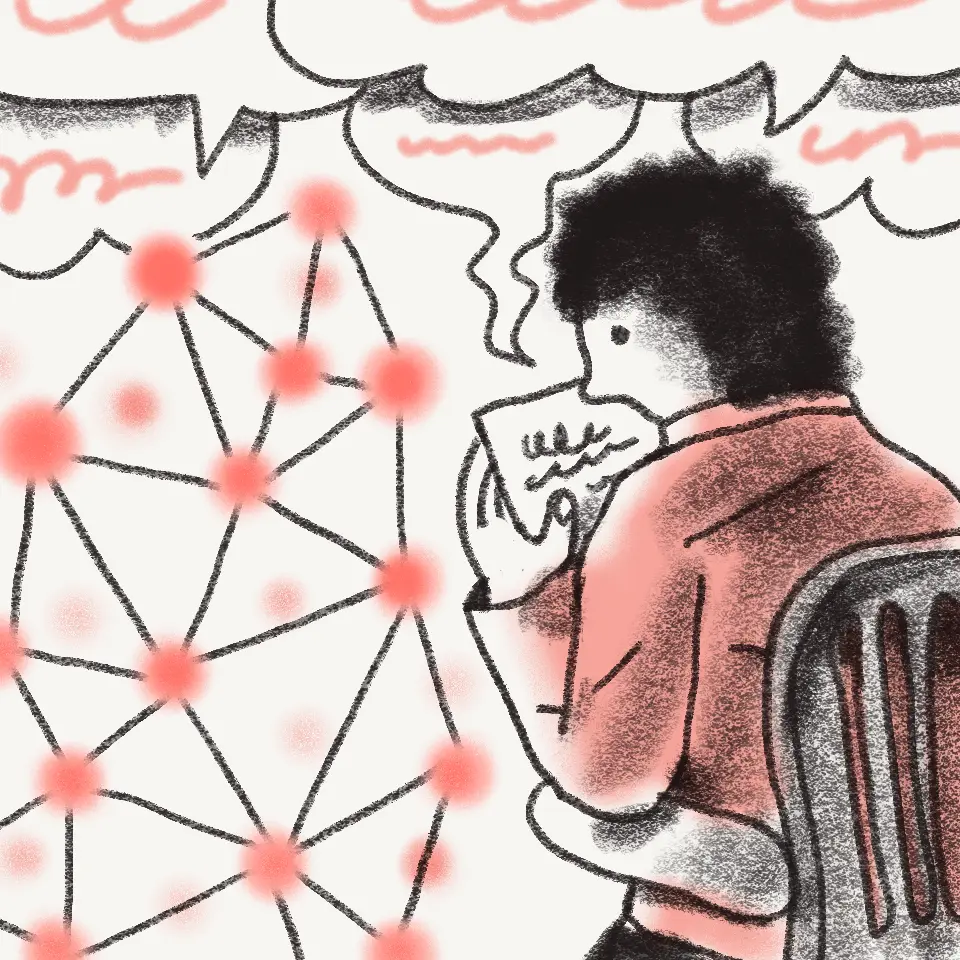

Running Wolf is one of a small but growing number of researchers who believe AI has the potential to safeguard endangered languages by simplifying the learning and practice process for speakers. As a co-founder of the First Languages AI Reality (FLAIR) Initiative at Mila Artificial Intelligence Institute, he is at the forefront of efforts to update the way indigenous languages are taught and preserved. “The ideal outcome is that we reverse this pendulum of language loss,” says Running Wolf.

We discussed his Cheyenne roots and how his work experience as an engineer in AI speech recognition blossomed into a bigger calling.

What was your upbringing like?

I grew up in a rural village called Birney, Montana. It was the traditional home of my mother's family, the Northern Cheyenne. There were about a dozen houses—the village was originally just my mother’s extended family. I grew up with my cousins and didn’t really meet an outsider until I went to college, honestly. We kept the traditions of the Cheyenne, and I participated in them from when I was a young child.

Did you actively speak the Cheyenne language?

I understood most of it. But it wasn't something that was taught intentionally. It was a sore spot—for a long time Cheyenne was restricted institutionally. My mother managed to avoid the school system because her grandparents would hide them in the hills to avoid the government taking the children and putting them in boarding school. So you can see how speaking openly in Cheyenne could become a liability. But I grew up listening to it.

"The ideal outcome is that we reverse this pendulum of language loss."

So family had an outsized influence on your education.

My mother—they could write books about her. She used to be an engineer at Hewlett-Packard, and spent time as a surfer girl, and lived in Cologne at one time. She taught me to read when I was a toddler, and taught me math using the slide rule. She also wanted her children to have a connection to our family and our traditions. She encouraged us to be indigenous.

How did you get interested in linguistics and technology?

My grandfather spoke several languages: Cheyenne, Arapaho, Crow, and Lakota. I was always very proud of that. That used to be the norm for the Cheyenne—how we survived was being able to negotiate. So when I went to college I started thinking about language a lot, and how we might use modern technology to secure our future and culture. I think it's critical that these technologies are compatible with indigenous languages, not only from a technical perspective, but also our ways of knowing.

How did working as an engineer on Amazon Alexa influence where you’re at now?

I joined in 2017, working on the privacy team for Alexa. My team was tasked with solving really big problems for millions of users—it was some of the most fun I've ever had. We were charged with implementing compliance with all these different international privacy regimes. I was one of maybe 12 engineers reading the entire code base, because we needed to have a really strong grasp of how Alexa worked. As I became more and more familiar with it, I began to think, “What if we could do this for indigenous languages?”

"I started thinking about how we might use tech to secure our future and culture."

What did you find out?

As part of my day-to-day, I would talk to some of the brightest minds in automatic speech recognition—so I asked them. I discovered that it's a technical challenge, given the differences between English and French and German versus indigenous languages like Lakota or Cheyenne. I took internal training on machine learning, and began to really fundamentally understand that we needed to solve some key technical challenges. And I thought I would best pursue that in academia, so I left Amazon. That's why I've been doing this research, which is currently housed at Mila [the world’s largest academic center for deep learning] in Montreal.

What area is your research currently focused on?

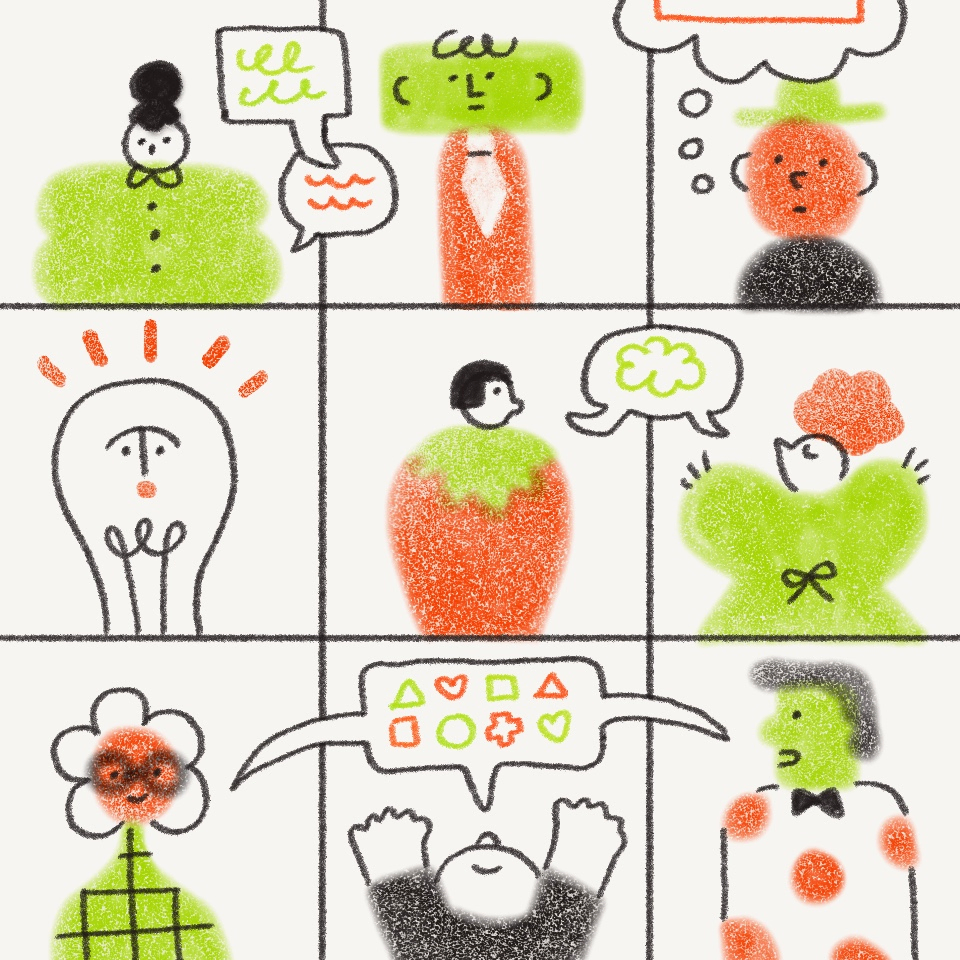

What we're doing right now is research and methodology to create automatic speech recognition for very low-resource indigenous languages in North America. In that process, I’m working on solving a lack of data, and the lack of compatibility between current AI methodologies and the morphology [the ways that words are formed] of North American languages, and trying to do it in a way that is ethical, earns the trust of indigenous communities, and hopefully inspires others. I don't want to be the figurehead of indigenous AI. I need peers. I want communities becoming exemplars of what should be happening.

About those technical challenges—what makes many indigenous languages fundamentally different than English?

English has a finite distinct dictionary. In polysynthetic languages [such as Cheyenne], we have an infinite number of words, and each word can convey as much information as an English phrase.

Let’s take the simple example of a red car. I wouldn’t simply say “the red car.” In one word with three morphemes, one per word, and depending on the language, I’d say, "It’s your car, you are an acquaintance, you’re really far from me, and maybe that you’re to the west of me." You bend all of that highly contextual information into a word that means "red car." The dynamism here is such that a word may never occur more than once in a lifetime.

But how do you gather a data set of infinite words?

These are human languages. So there’s a finite set of morphemes. It’s doable for AI to speak indigenous languages—it's just never been done.

"I don't want to be the figurehead of indigenous AI. I need peers."

I understand that you’re also dealing with unique privacy and ownership issues.

If you look at the archival audio that we're working with, much of it is sacred stories that have been transcribed. There's an intrinsic sensitivity to these stories that we need to honor. So a key component of our research is making sure that we really earn the community's trust and keep it. Our goal isn't to build a data set of audio that we could leverage for monetary gain. Our goal is to build a methodology and technology that can scale broadly.

We also have a technical challenge: We don't have enough speakers or enough audio to rival a Siri or Google Assistant. So what we need to do is collect multiple tribes together who have similar phonetics and similar morphology, and amplify our datasets. That requires a lot of coordination and trust.

What do you imagine building?

The majority of many tribes do not speak the language. We need to create tools that help them spread the knowledge a little bit easier. A key focal point of our practical research is to create tools that holistically integrate with the curricula that the speakers are teaching so that they could go home and practice on their phone with AI, without the necessity of having a speaker with them. That’s a big benefit if you're shy—which most people are.

And what if you could talk to your smart lightbulbs and say, in Lakota or Cheyenne, "Turn on the house lights"? It would make that indigenous language part of your life, rather than the language of ceremony. That's what some of these languages have been relegated to—they’re no longer a day-to-day language except for a small pool of speakers. But if you're a language learner, one of the best things to do is be immersed in it using all the technology that surrounds you.

I mean, obviously, not everyone is going to do it. I don't anticipate that every indigenous person is going to be wanting to learn their language, because that's a personal choice. But making it easy and accessible—I think it's a big first step.

Are there other projects in tech that you’re really psyched about?

I'm really inspired by the work being done by my peers at the Native BioData Consortium, a collection of biomedical researchers working on indigenous genetic health research.

And as a VR geek, I’m thinking about what might be possible in that realm. Imagine going to Montana to the site of the Battle of Little Bighorn or Custer’s Last Stand, and putting on an XR headset and experiencing it from all the multiple universes and languages of the tribes involved: the Crow, the Cheyenne, Lakota, and Arapaho, really contextualizing what went on. What was the significance of this battle from all these literal viewpoints? We’re seeing the edges of this technology start to emerge. I’m hoping this research I’m doing will play a role in enabling that future.

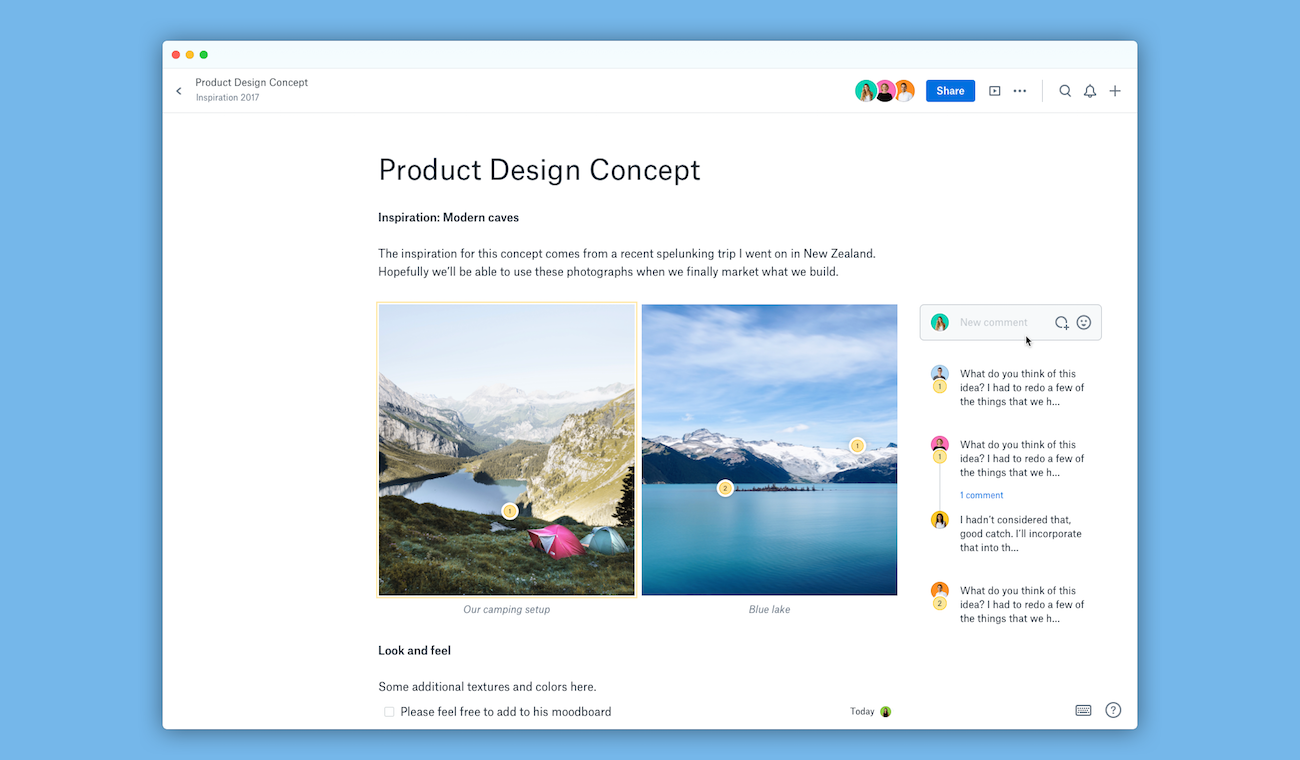

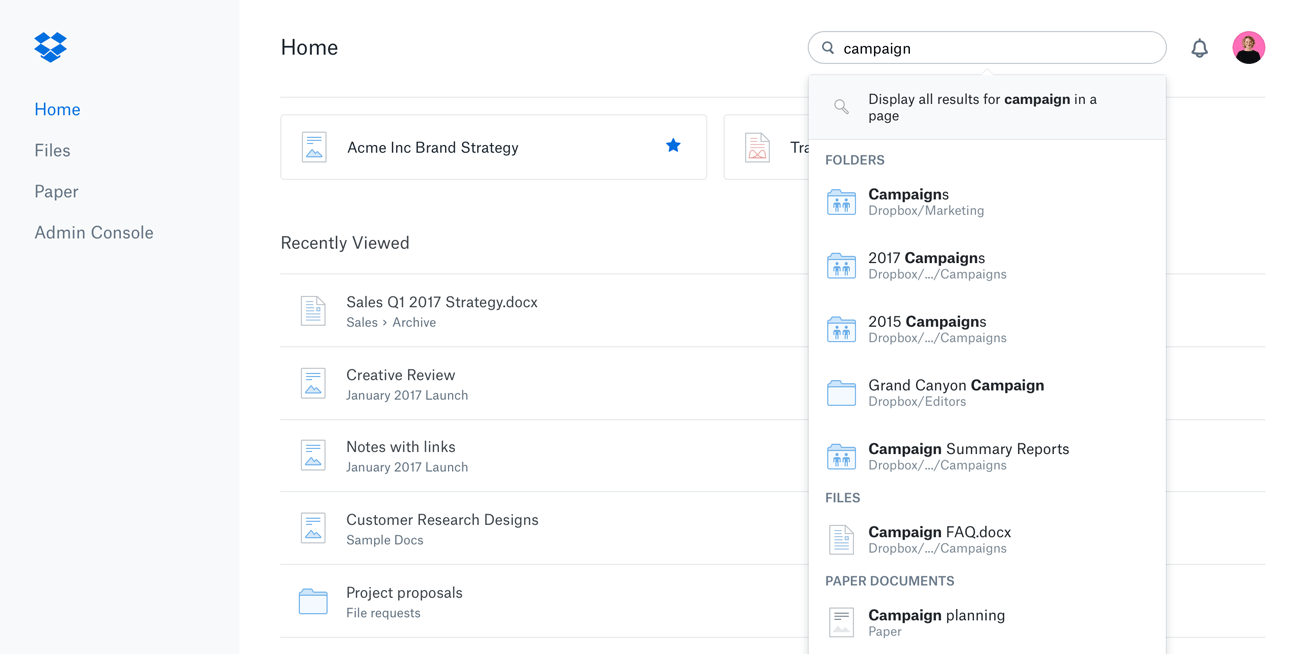
