Artificial intelligence is making huge waves in psychotherapy—and not just through the use of chatbots. One psychologist and researcher argues that AI might even teach your next therapist how to improve patient outcomes.

Clinical depression affects nearly 20% of Americans, and the need for effective treatment is urgent, yet mental health care remains hard to access. Many people lack health insurance. Others struggle to find an in-network provider. On top of that, there is a nationwide shortage of mental health care professionals.

To address these gaps, startups and practitioners are leveraging AI, as seen with Cedars-Sinai's recent AI-based VR talk therapy app. In it, Apple Vision Pro headset users can chat with an avatar “programmed to simulate a human therapist.”

A number of mental health startups have also been advertising AI for therapeutic use. Users are encouraged to treat chatbots as they would a real-life therapist: sharing thoughts and feelings and asking for strategies and advice on handling day-to-day life. Some people champion the bots for offering actionable steps to improve their lives.

But critics of AI's application in mental health have raised concerns, including privacy issues, biases, and the risk of inadequate or harmful advice.

So is there a place for AI and new tech in the world of mental health? And is there a way to integrate AI into treatment responsibly? We consulted Dr. Betsy Stade, a psychologist and AI researcher at the Stanford Institute for Human-Centered AI, about ethical integration practices. “I want to empower other mental health practitioners to have a voice in this conversation,” says Stade.

At first glance, the combo of technology and mental health doesn’t feel like a natural fit. Can you talk to us about why that became your field of study?

I was always excited by the prospect of psychology research, a career pursuing empirical answers to questions like “How do people think?” and “Why do they think the way they do?”

When I started doing psychology, I was working in a hospital doing clinical trial research. A lot of the reactions that I was having were along the lines of “Why are we still using paper and pencil forms to collect information about people? Is there not a better way?” I started thinking about the application of technology in the field. Over the course of a decade, that evolved into using AI and large language models to measure and intervene on conditions like depression.

When people think about AI and mental health, they immediately think of chatbots. But your work considers ways AI could be applied beyond talk therapy.

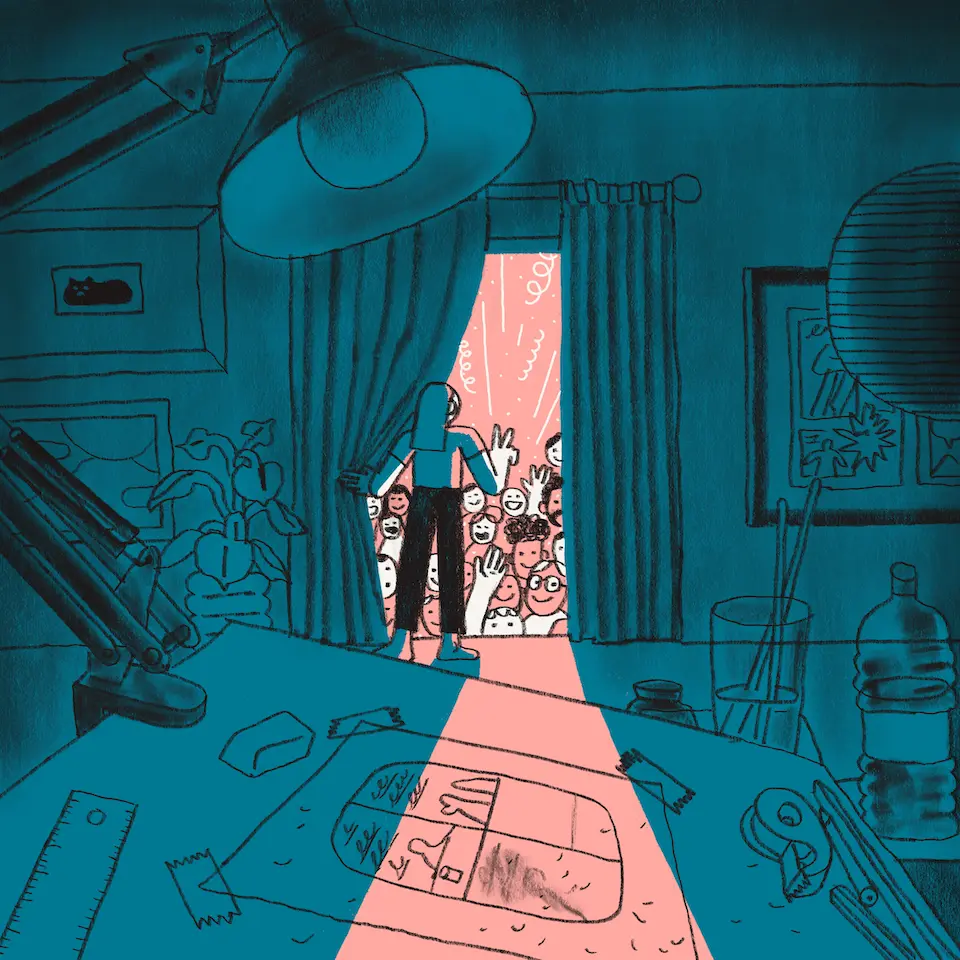

There is a science of psychotherapy. As clinical psychologists and clinical scientists, we study which therapies are more effective than others and what makes a therapy effective. We also know that even when a therapy is considered a gold-standard treatment, its uptake in the community is often really poor. For example, we have two gold-standard therapies for PTSD. They work really well. But very few therapists actually know how to deliver them. I think that with AI and technology, we can try to promote more evidence-based therapy practices.

I see a lot of promise in getting people the treatments we know work in a truly scalable way, whether that's by helping more therapists use evidence-based therapies, through call-center treatments, or by developing apps that give people access to concrete, actionable tools that science supports.

There are people who seem to actually prefer chatting with robots rather than human therapists. Why do you think that is?

Anecdotally, I've heard that some individuals with autism spectrum disorder may prefer speaking or interacting with technology rather than with a human. I've also heard anecdotes about people who would be very happy to see a human therapist, but time, money, or even the therapist's availability gets in the way. We're running a study to get more concrete, empirical answers about how many people seek out chatbot therapy and why they're doing it.

One thing that I would add: there may be people who have a preference for chatbot therapy. Let's say a person has social anxiety disorder. Clinically speaking, we might not always want to follow the person's preferences. We have evidence that when socially anxious people avoid social situations, that helps keep the problem alive. It feeds the anxiety. We certainly want to take those things into account if we're talking about who uses chatbot therapy and who doesn't.

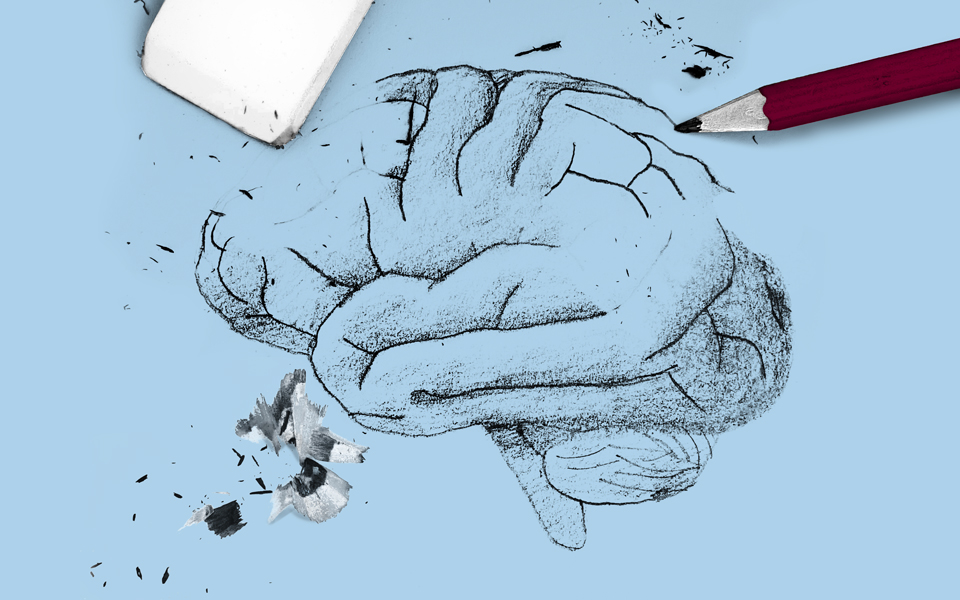

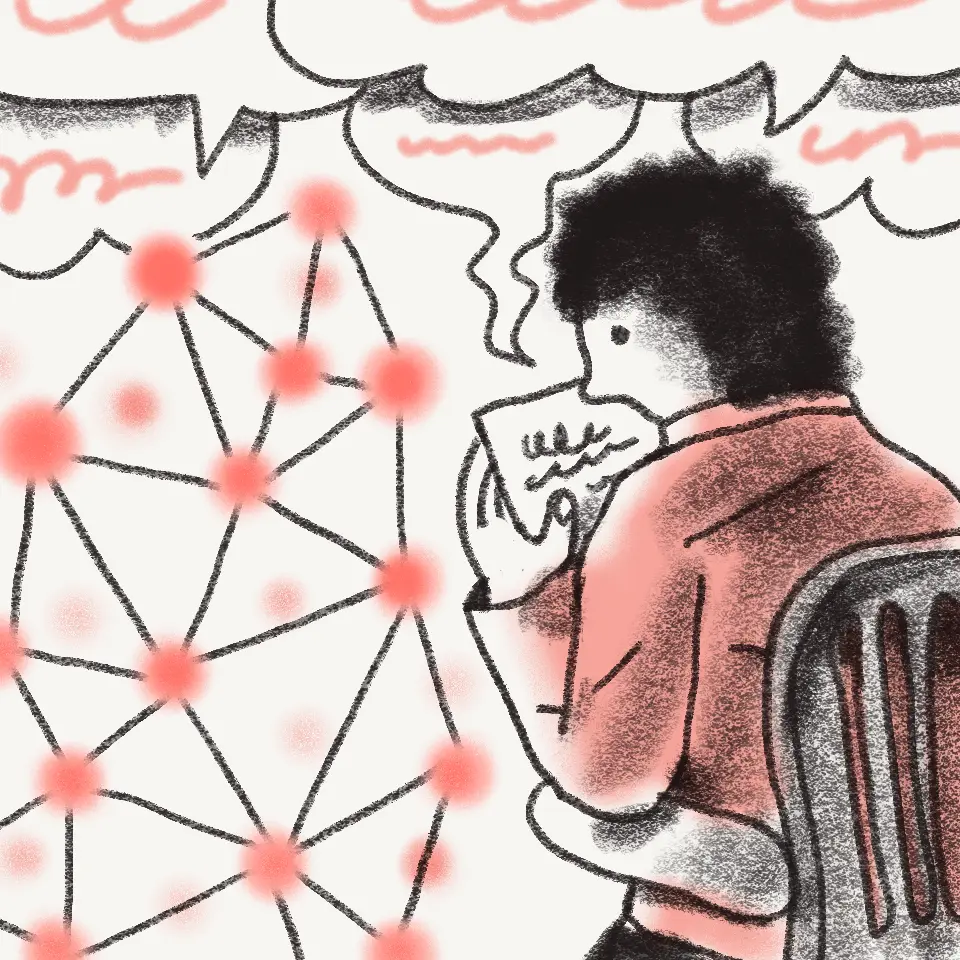

One concern I’ve had, and maybe it’s just my own fragile ego speaking, is that I don’t trust a robot to understand my issues. But I could see how it might be able to recognize or identify patterns.

We humans are incredibly complex. Emotions are complex. I would never say that one person can be boiled down to some sort of score. It is also true that depression is a real thing. Even people with really mild depression can have their lives significantly affected. I'm not claiming that AI is ever going to understand and completely capture you as a human. That is a task for other humans. But could these tools assist us? One of the projects I'm working on now is a framework for evaluating whether AI mental health applications are safe and ready to be deployed for clinical use.

We really want to advocate for caution. These are people's lives. We deal with really serious issues and topics in the realm of mental health.

I think one of the major concerns that people have with AI and therapy is that not everyone is going to be approaching it with the same care as you.

Well, I appreciate you saying that. And I will say that I think one factor is this: I'm a clinically trained psychologist, and I'm in the process of getting licensed. It's not typical for us to learn about AI. I also work with a lot of computer scientists, and it's not typical for computer scientists to get trained in mental health.

I really do believe that if more clinical psychologists, psychiatrists, and mental health practitioners had just a little more understanding of and familiarity with how AI works, they could see its potential. On the flip side, I try to do the same with computer scientists. I do feel that most of the people I've worked with, and many of the companies I've interacted with, are taking these things very seriously. I'm cautiously optimistic. But I'm aware that we need to stay alert to the possibility of harm and of things going wrong.

If you were designing the perfect use of AI in psychology spaces, what would that look like to you?

I'm very excited about the idea of using AI to help teach more therapists gold-standard treatments. Right now, it's really expensive to get trained in them and to accrue enough experience. It's also hard to give therapists feedback, because in our field there usually isn't time for a supervisor to sit in on an entire session of a new therapist delivering a treatment to a patient. The promise of AI to teach, provide feedback, and help with skill acquisition is really exciting to me.

The thing that I ultimately care about is more people having access to the treatments that work.

This interview has been edited and condensed for clarity.

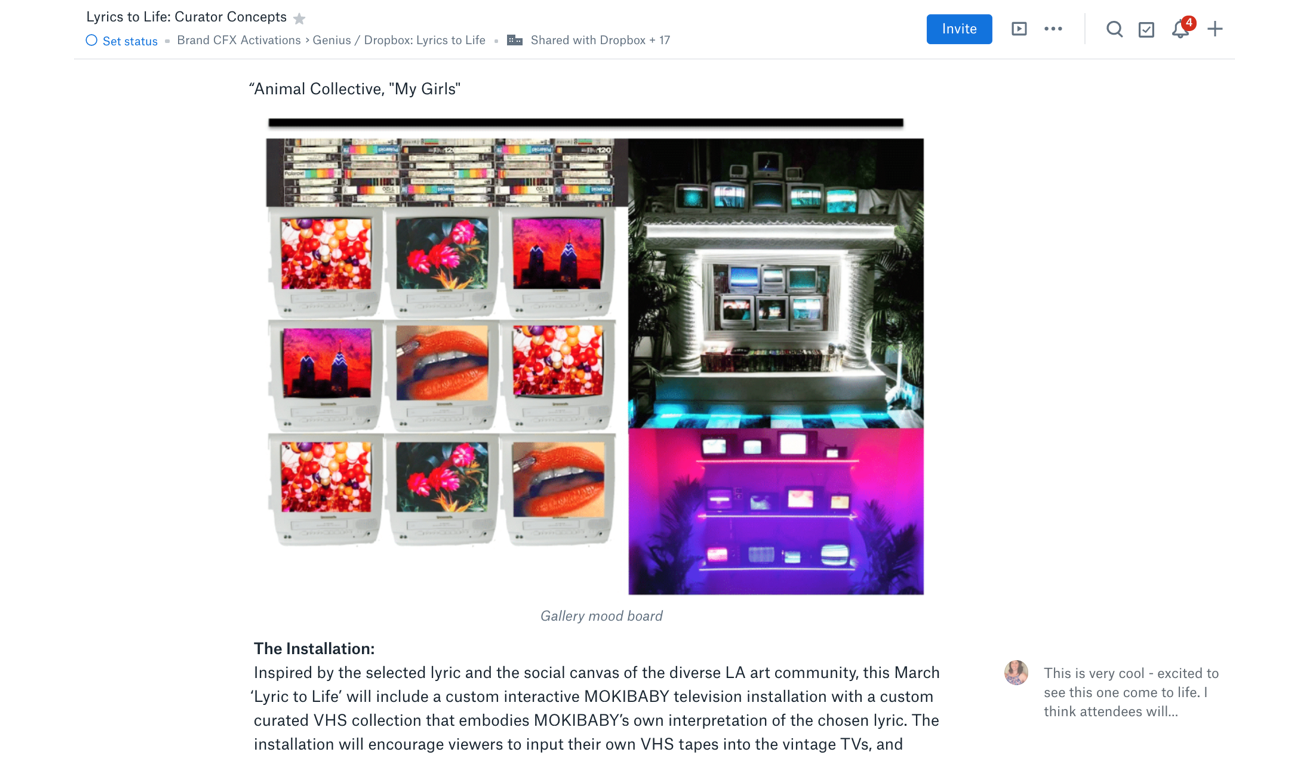

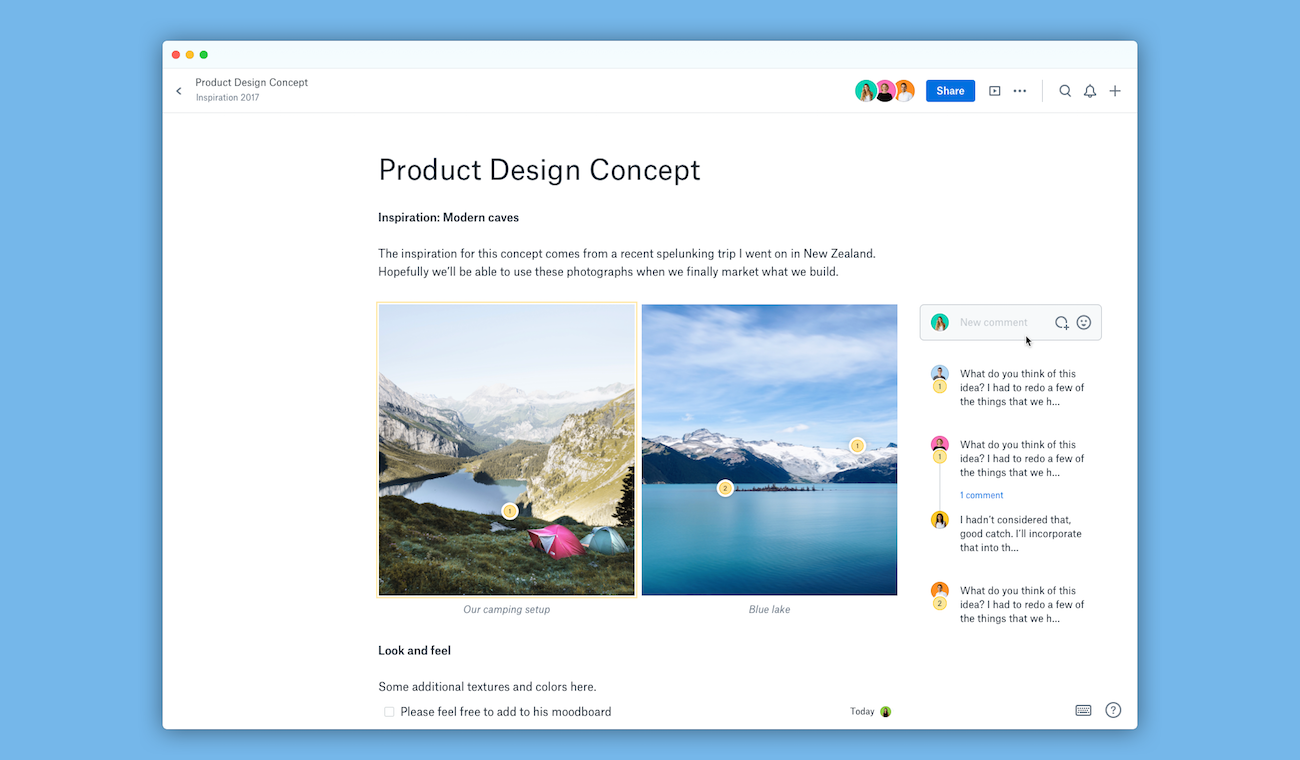

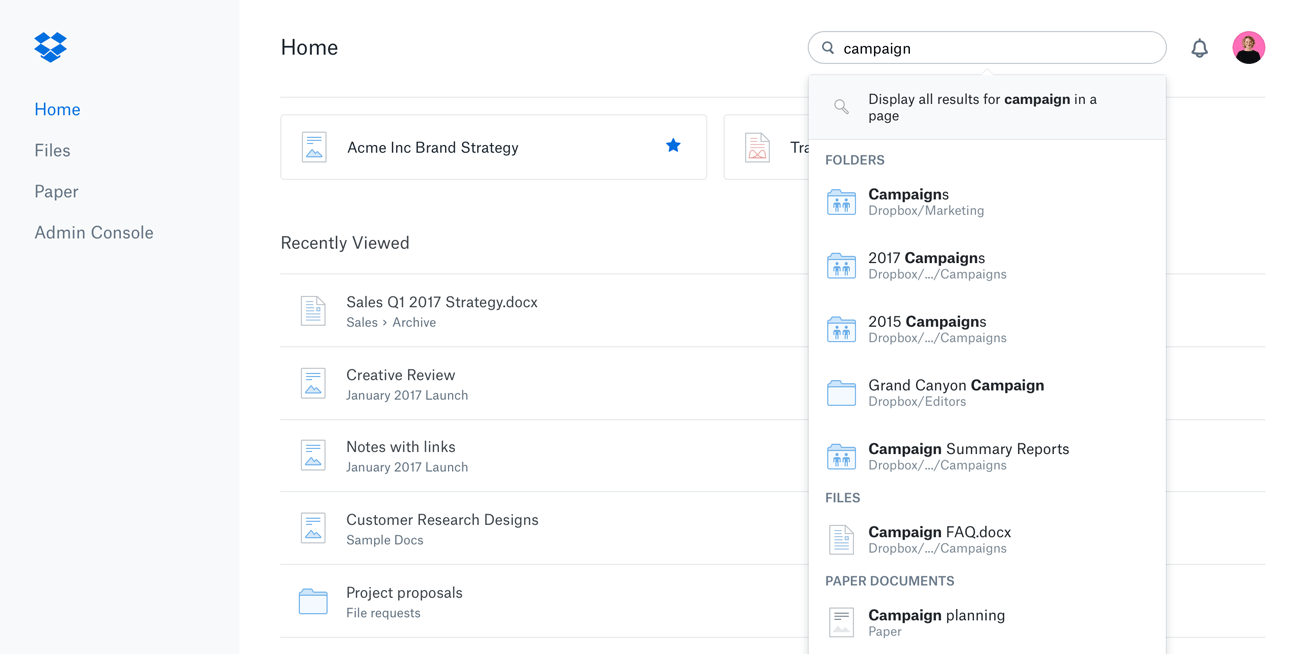