An “empathy-as-service” tool is using artificial intelligence to flag poor workplace communications in the hopes of creating a more empathic workplace.

If social media is a barometer of what’s going on in our culture, then it looks like we’re in the midst of an overdue war on exploitative work culture.

My timelines now feature memes, videos, photos, and captions calling out any employer—or sometimes just the idea of one—that bases a person’s worth solely on their productivity. (Perhaps it’s a response to the Great Resignation, the steady wave of millions of Americans opting out of the workforce every month since the pandemic began.)

In fact, “I quit!” stories have become a whole new content category online. One of the biggest hubs for them is the r/antiwork subreddit. Created to discuss ways people can work less or not at all, it has become better known for viral resignation-by-text screenshots, like this one from a boss who threatened their now ex-employee’s health insurance if they didn’t work on their day off. The subreddit has blown up to 1.3 million members, known as “idlers,” who support each other as they navigate workplaces that undervalue or devalue them.

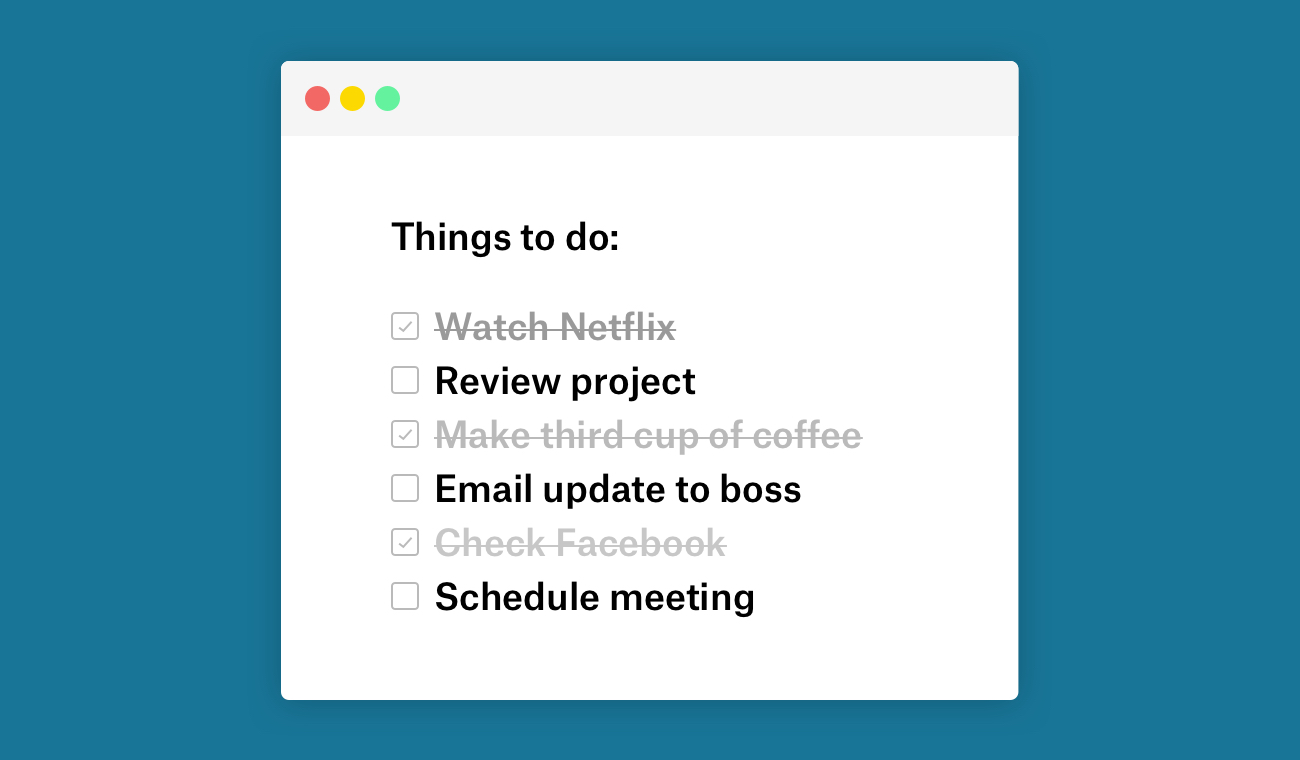

The thing that stands out the most when scrolling through r/antiwork and reading one terrible text exchange after another is the way these managers speak to their direct reports. These aren’t conversations; it’s clear they only want to hear “yes, sir” or “yes, ma’am.”

It would be easy to write off these toxic exchanges as extraordinary if it weren’t for the immediate flashes of recognition. While it may not have ended in “I quit!”, many of us have received an email or Slack message like the ones that typically start off these viral exchanges. Someone at work telling you to do something rather than asking. Or someone asking a question in a way that intimates a failure on your part and in a tone that doesn’t seem to register that you’re actually a whole human being with feelings.

At the core of these types of messages is a lack of empathy. As a voyeur, you can tell that neither party feels heard or understood. The screenshots we see on r/antiwork are the inevitable blowups after one unempathetic exchange too many.

Teaching AI empathy to help humans people better

One only needs to look at the state of the world to know that empathy has been on the decline for years. And not just anecdotally: a study published in Personality and Social Psychology Review in 2011 found that empathy levels amongst American college students fell by 48% between 1979 and 2009. (I doubt there have been significant gains in empathy in the 10+ years since the study’s results.)

To become a more empathetic society, we’d need larger initiatives based in community building and government resources, says licensed psychologist Grin Lord. But when it comes to approaching the decline at work, she believes tapping into ethical artificial intelligence could help bridge “the empathy gap.”

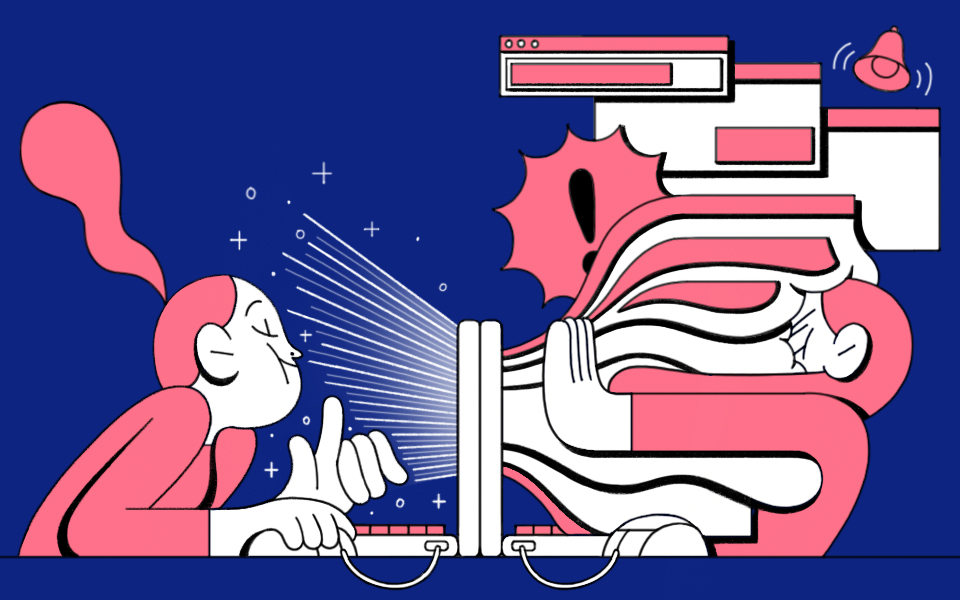

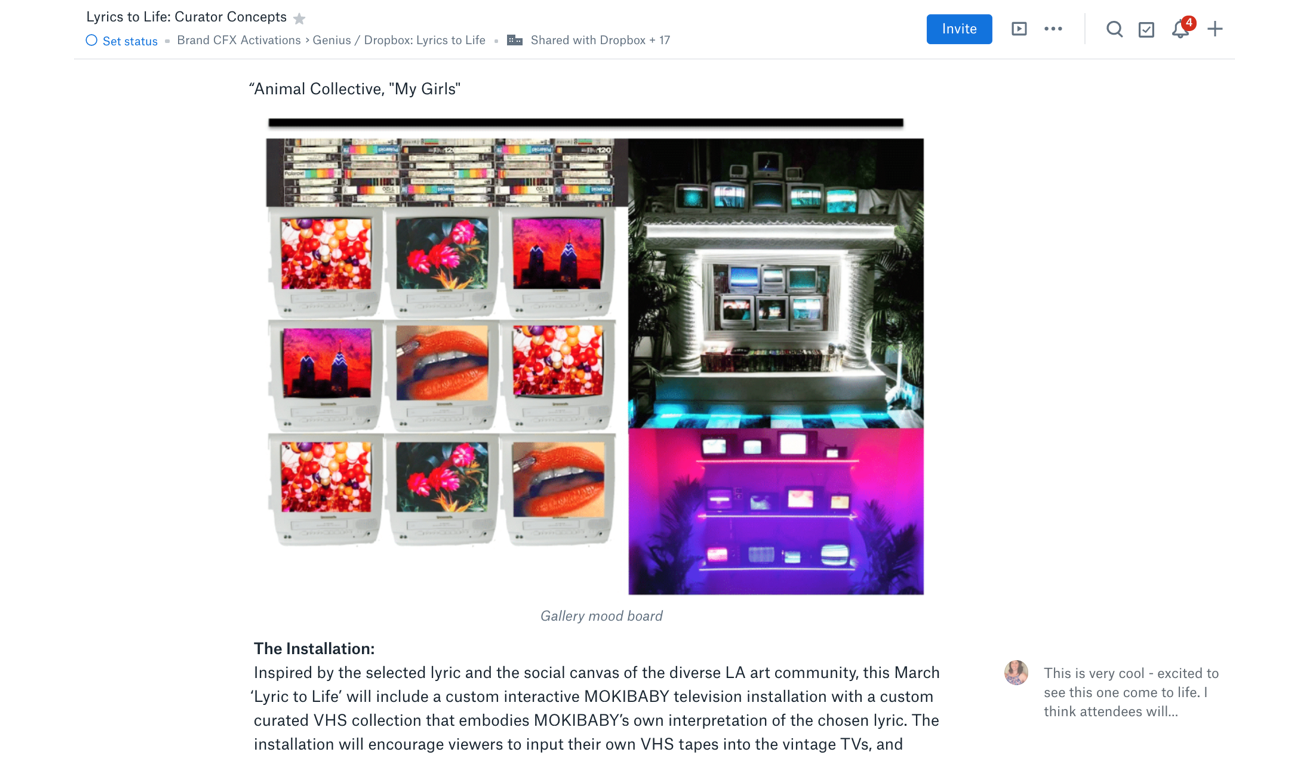

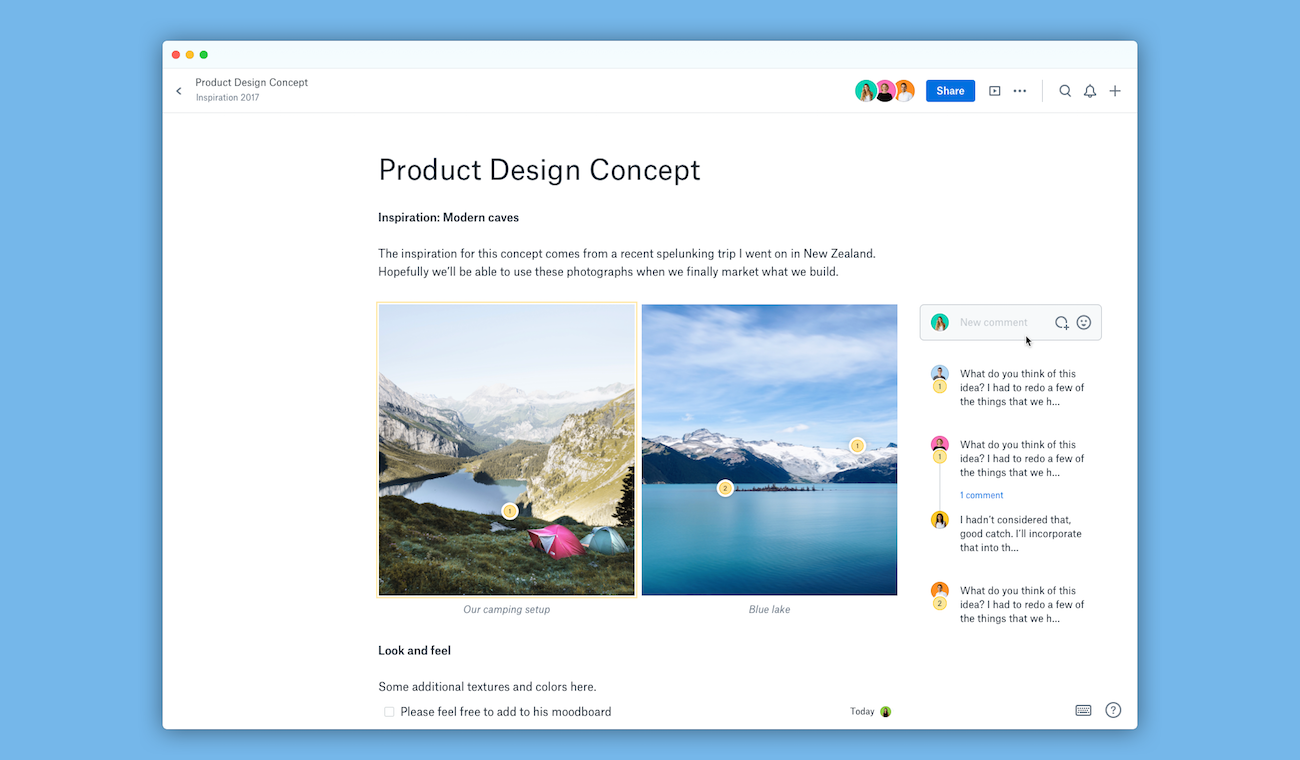

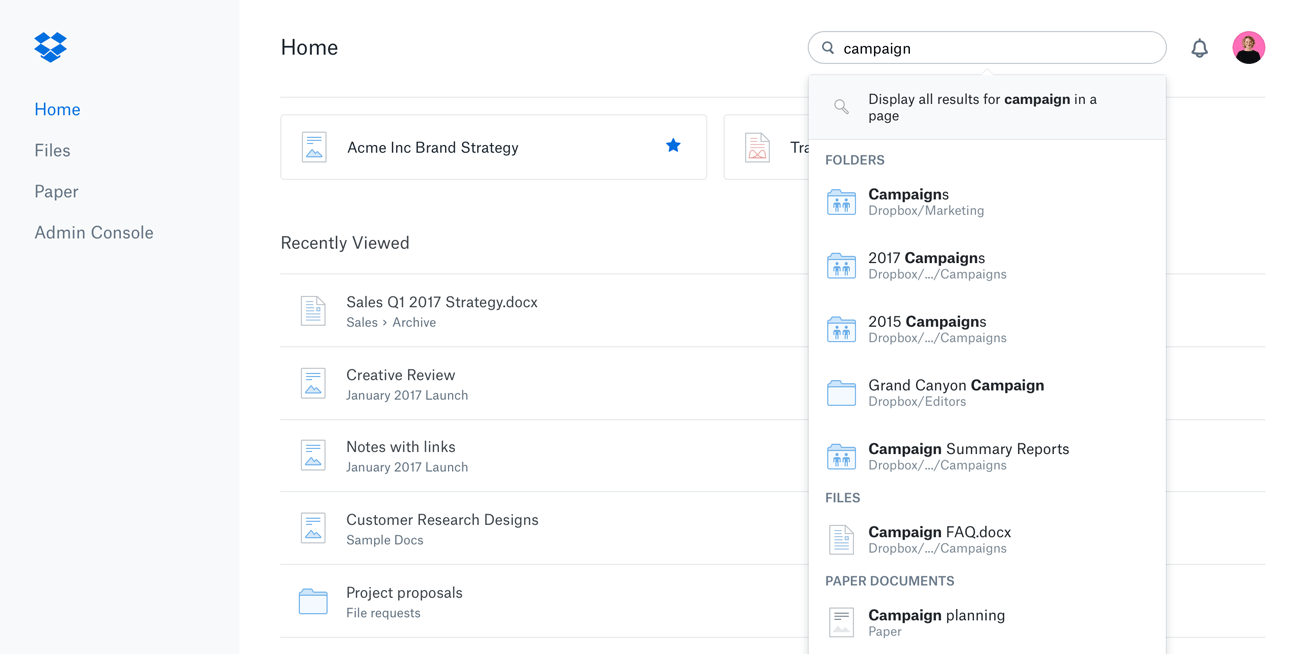

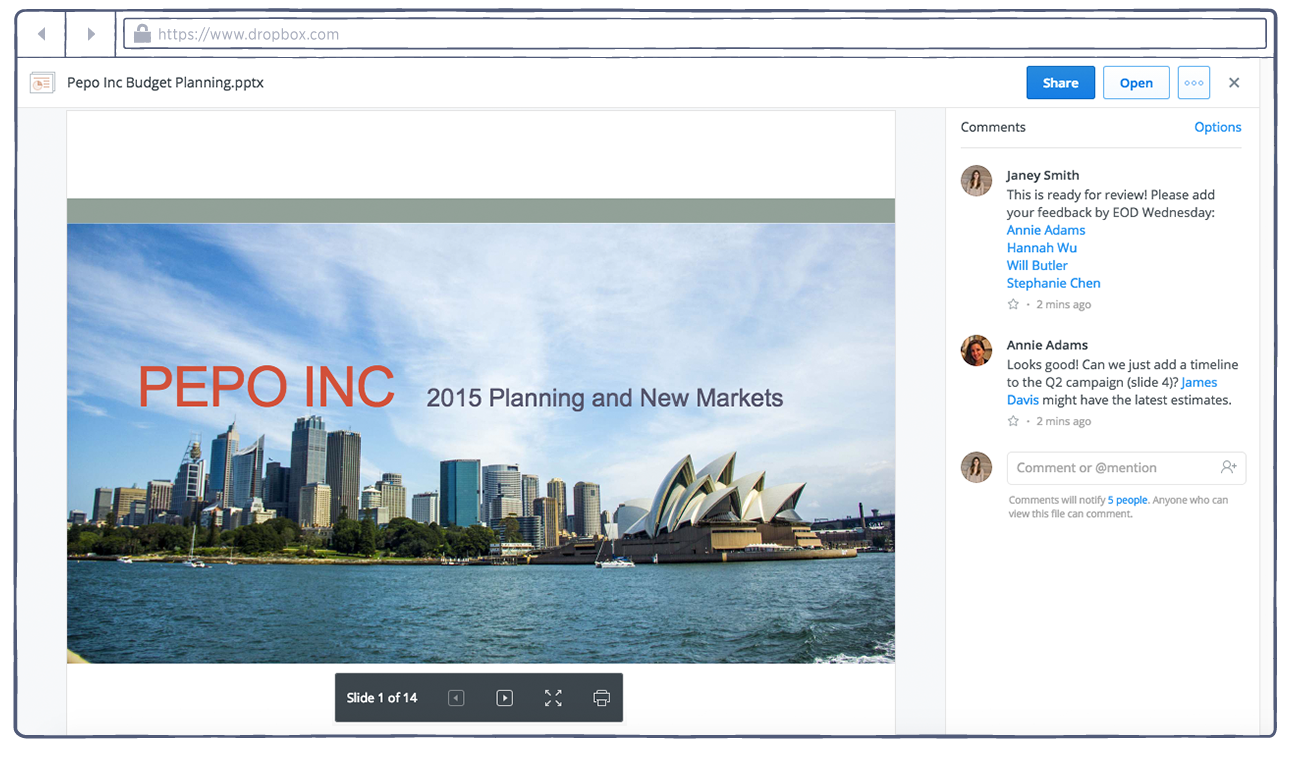

Lord is the founder of mpathic, a back-end integration for enterprise platforms that acts sort of like “Grammarly for empathy.” It offers real-time corrections to your messages before you hit send, whether that’s via Facebook Messenger, email, or Slack. (They’re working on text messages and have speech support on their roadmap.)

Using AI, mpathic highlights the potentially offending text in question and offers suggestions and behavioral advice in a pop-up window. “U ready to present to execs tomorrow?” became “How do you feel about the presentation tomorrow?” in one demo.

Improving the way we communicate with each other at work could also help with workplace dissatisfaction. In a 2020 Society for Human Resource Management survey, 84% of the American workers surveyed said “poorly trained people managers create a lot of unnecessary work and stress.” And the number one skill respondents said people managers needed to improve was “communicating effectively,” with 41% of participants considering it “the most important [skill] managers should develop.”

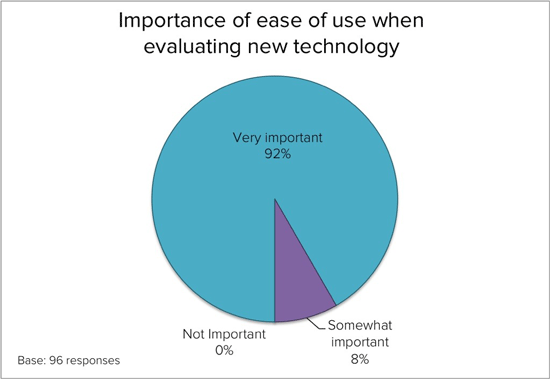

Showing empathy is a key to positive communication. Yet only 25% of employees “believe empathy in their organizations is sufficient,” according to a 2021 survey on the state of workplace empathy by business management consultancy businessolver. (They define empathy as “the ability to understand and experience the feelings of another.”)

According to Lord, sometimes AI can be better suited than humans at noticing and correcting these low-empathy moments before they snowball and contribute to low employee morale (or go viral).

mpathic’s suggestions are based on Lord’s 15 years of research. Lord and her team curate and source data for its model through Empathy Rocks (another arm of her company that uses games to train therapists in empathy) and by working with diverse organizations and communities around the United States. It’s an effort informed by an ongoing call for ethical AI that ameliorates bias, whether that’s seen in the data itself or through humans’ interpretation of the data. By broadening the model’s training data to include diverse voices, Lord hopes to reduce bias in their models so they “can assess if we’re starting to overcorrect a particular group.”

“What AI is good at with empathy is rules,” Lord said. “Like saying, ‘I’ve seen all this stuff. And I am not a human. I don’t have this particular bias or context; I only have what you feed me.’”

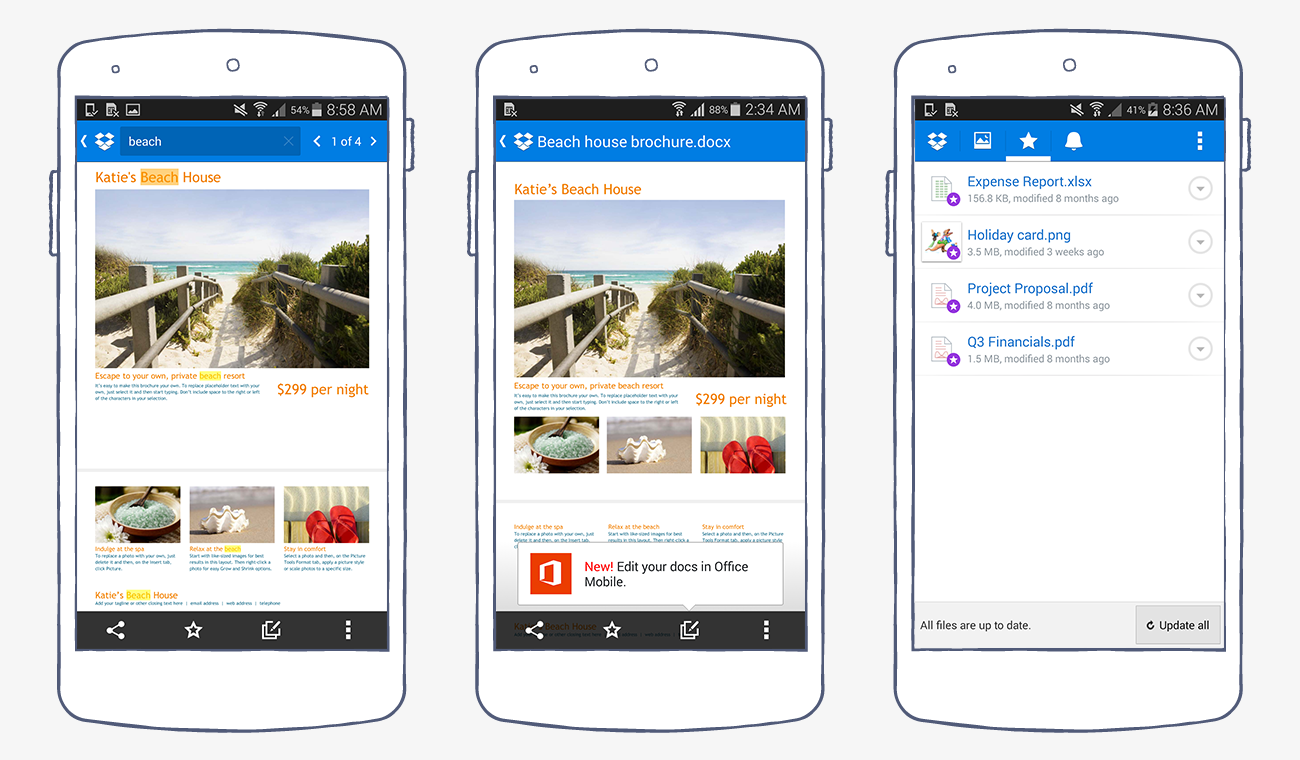

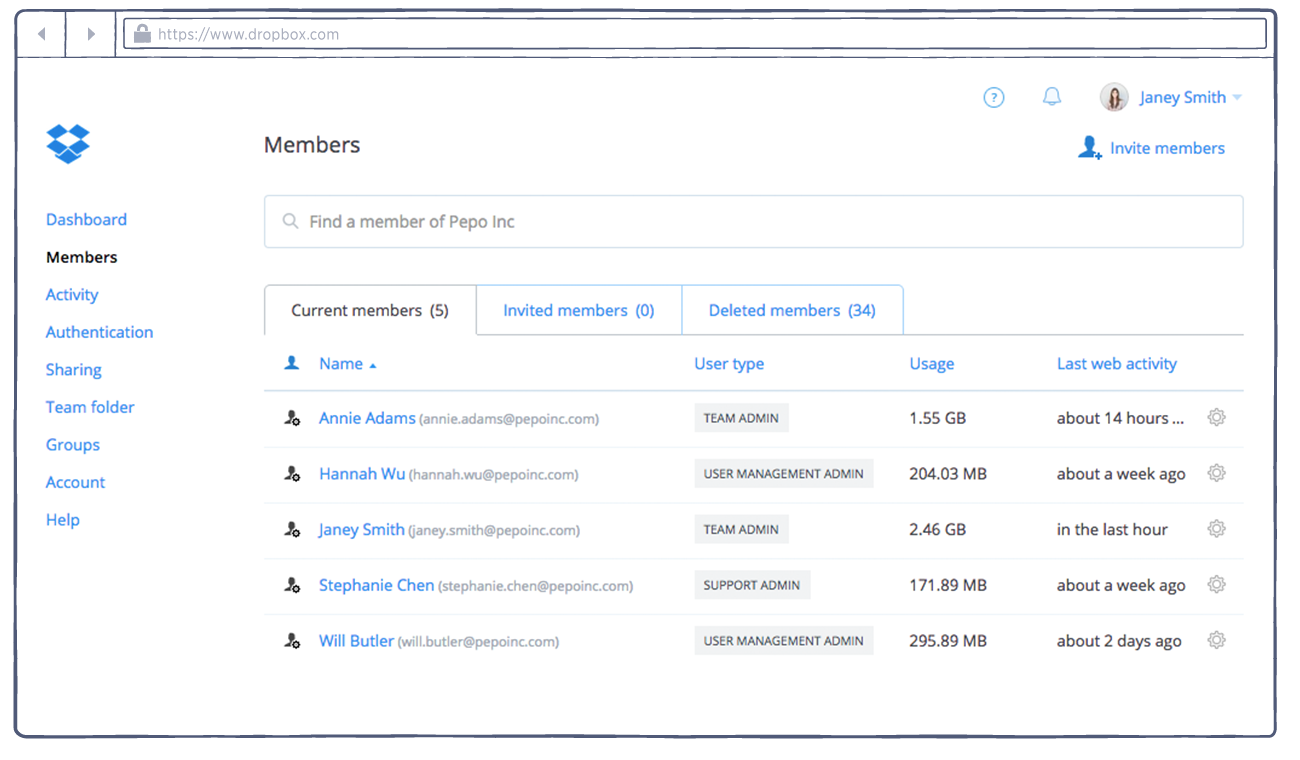

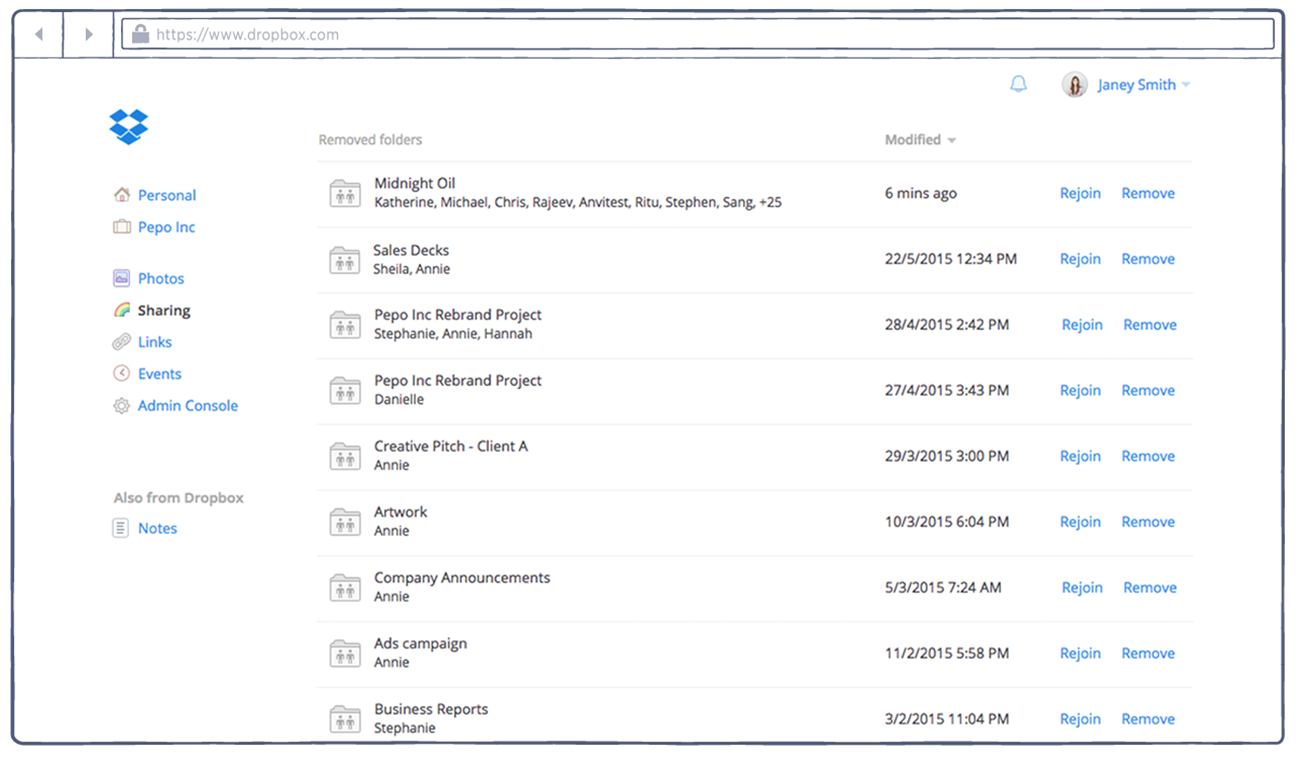

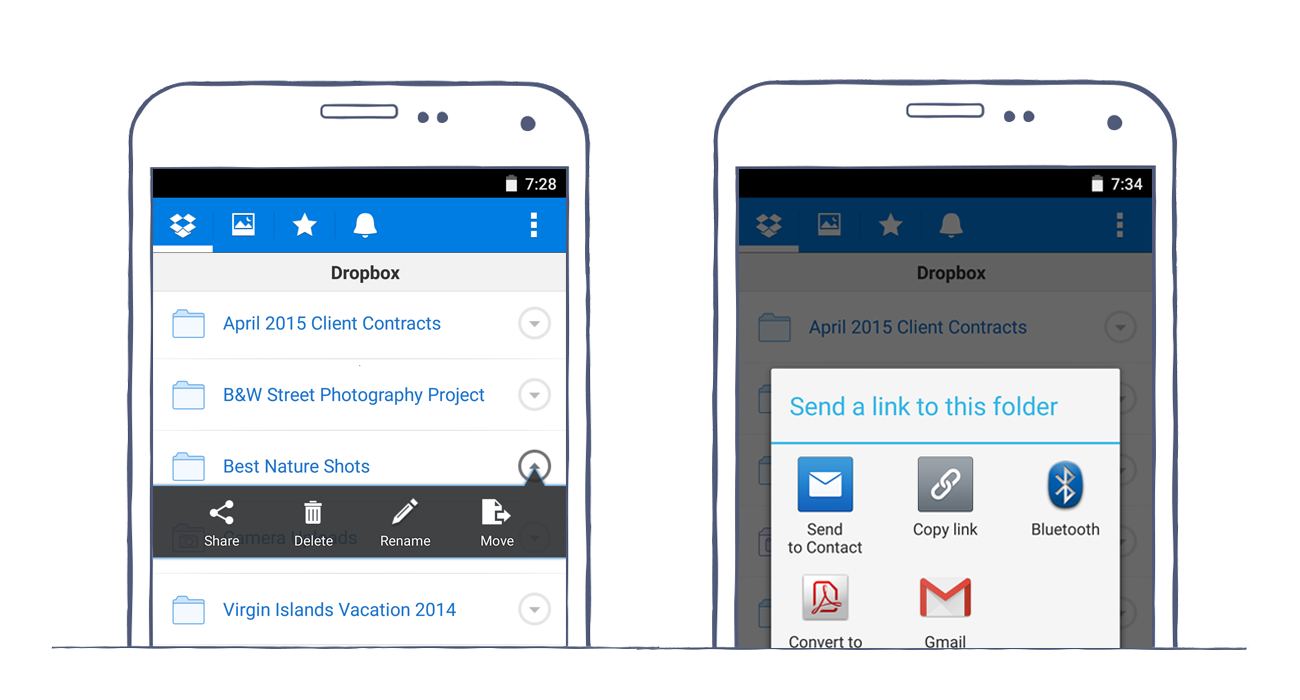

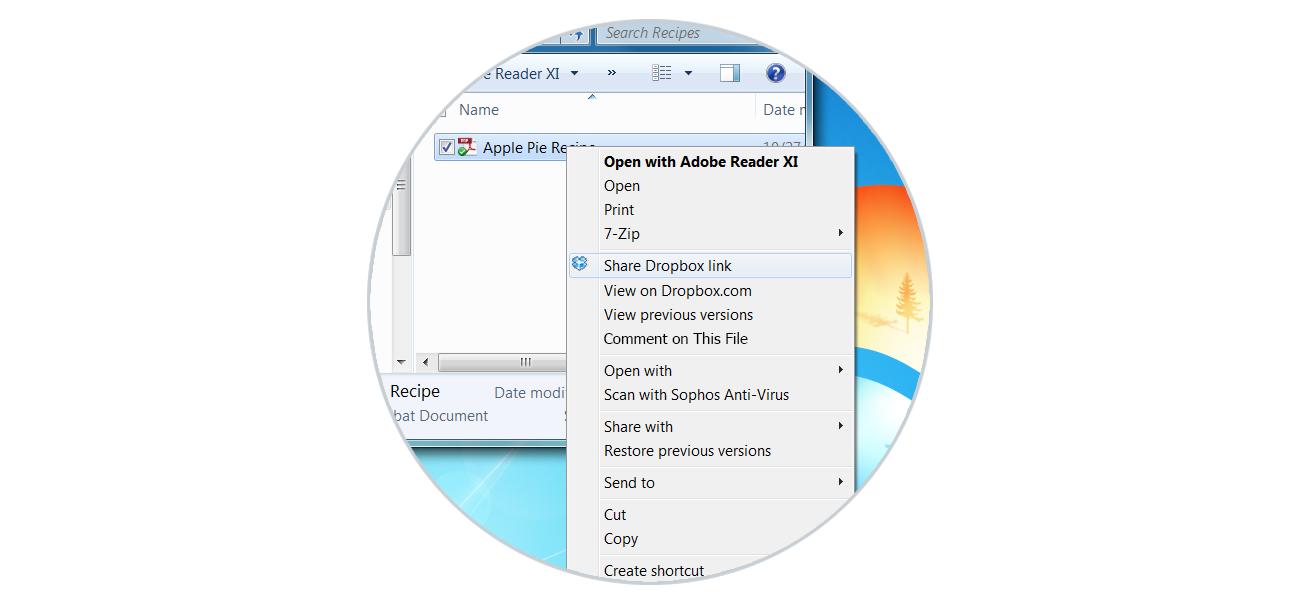

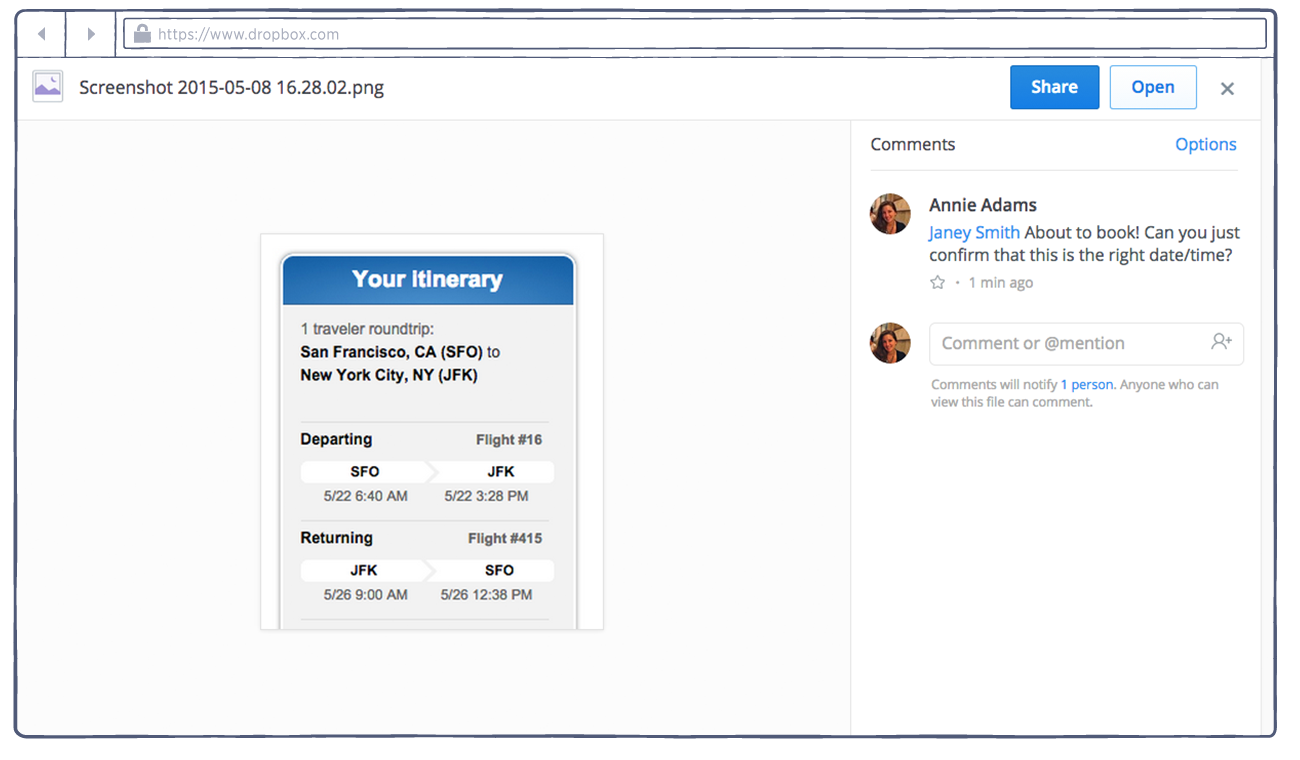

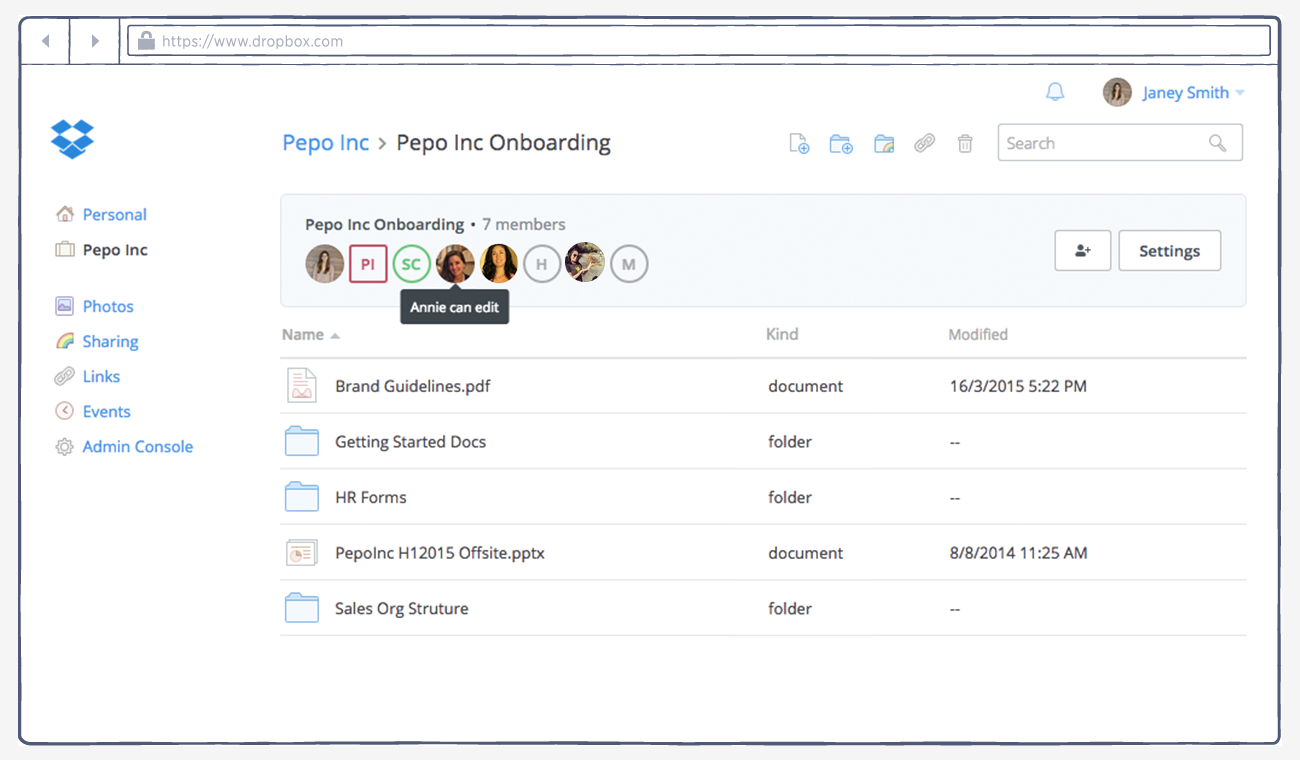

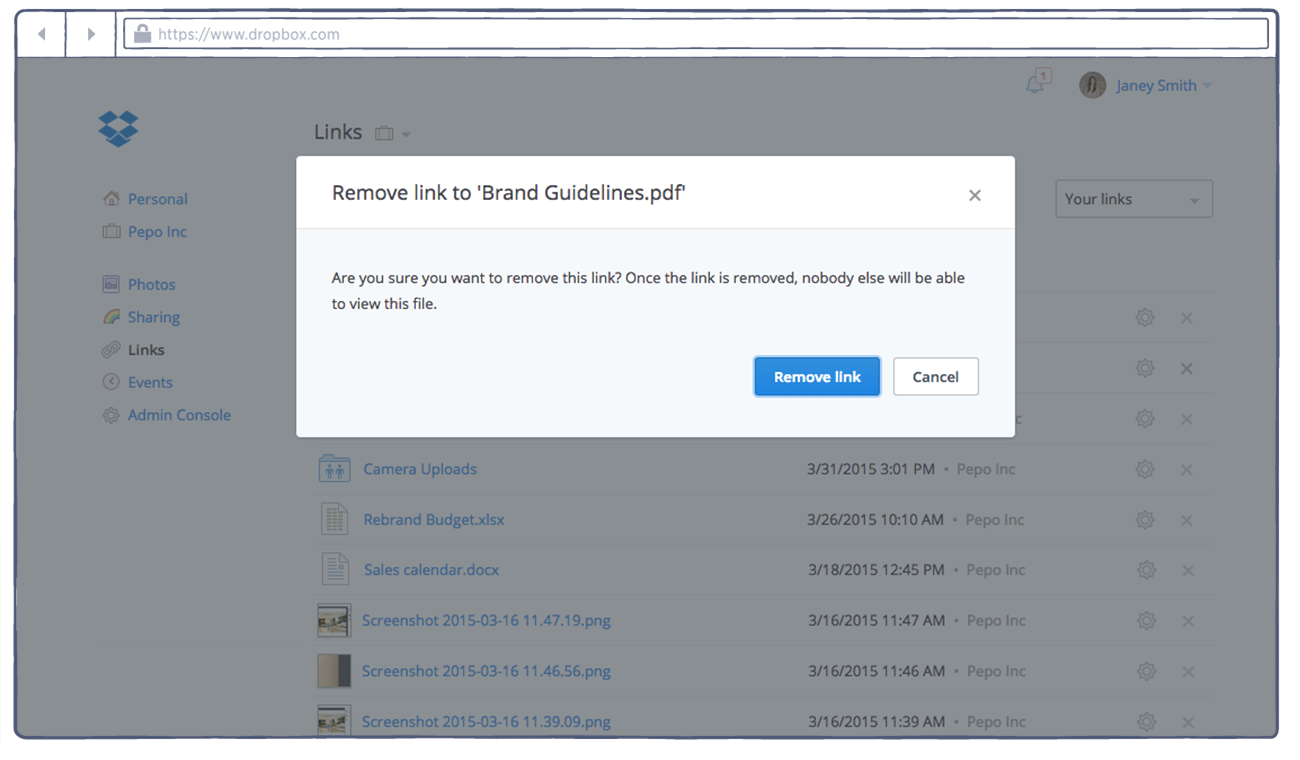

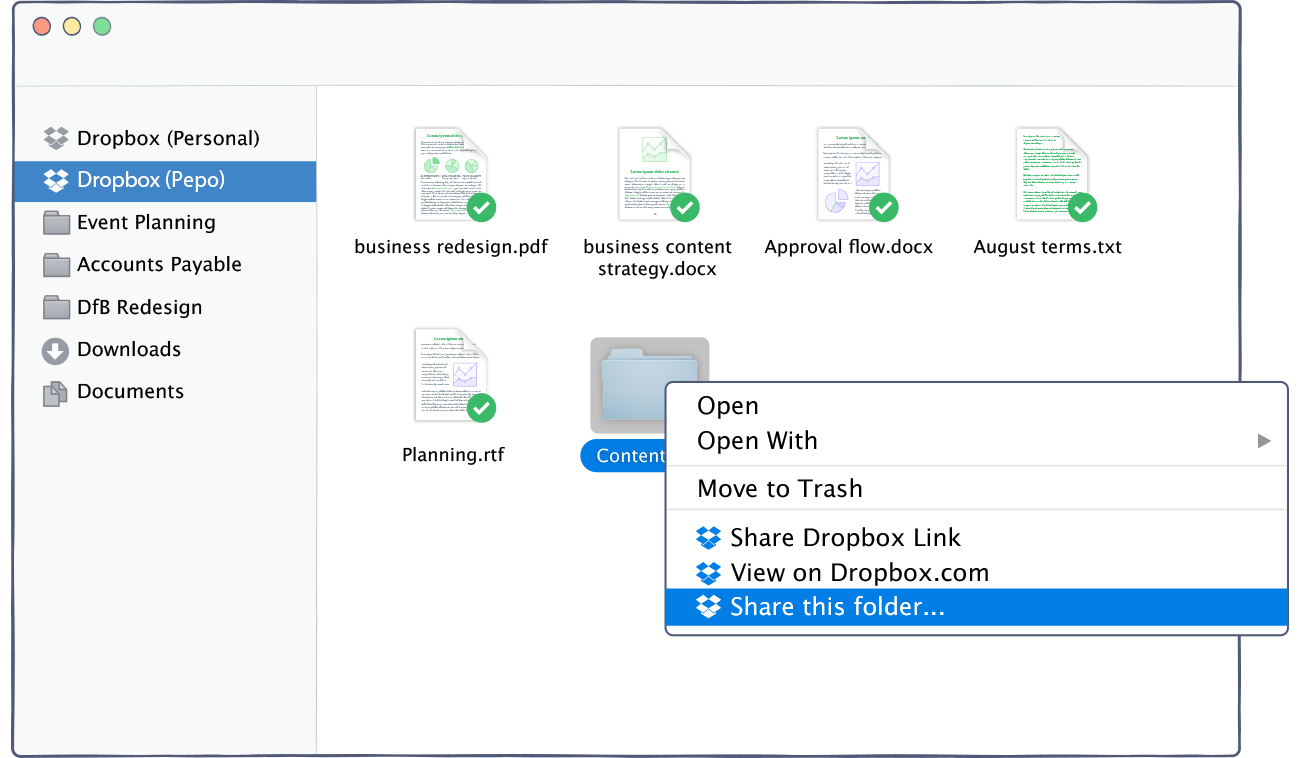

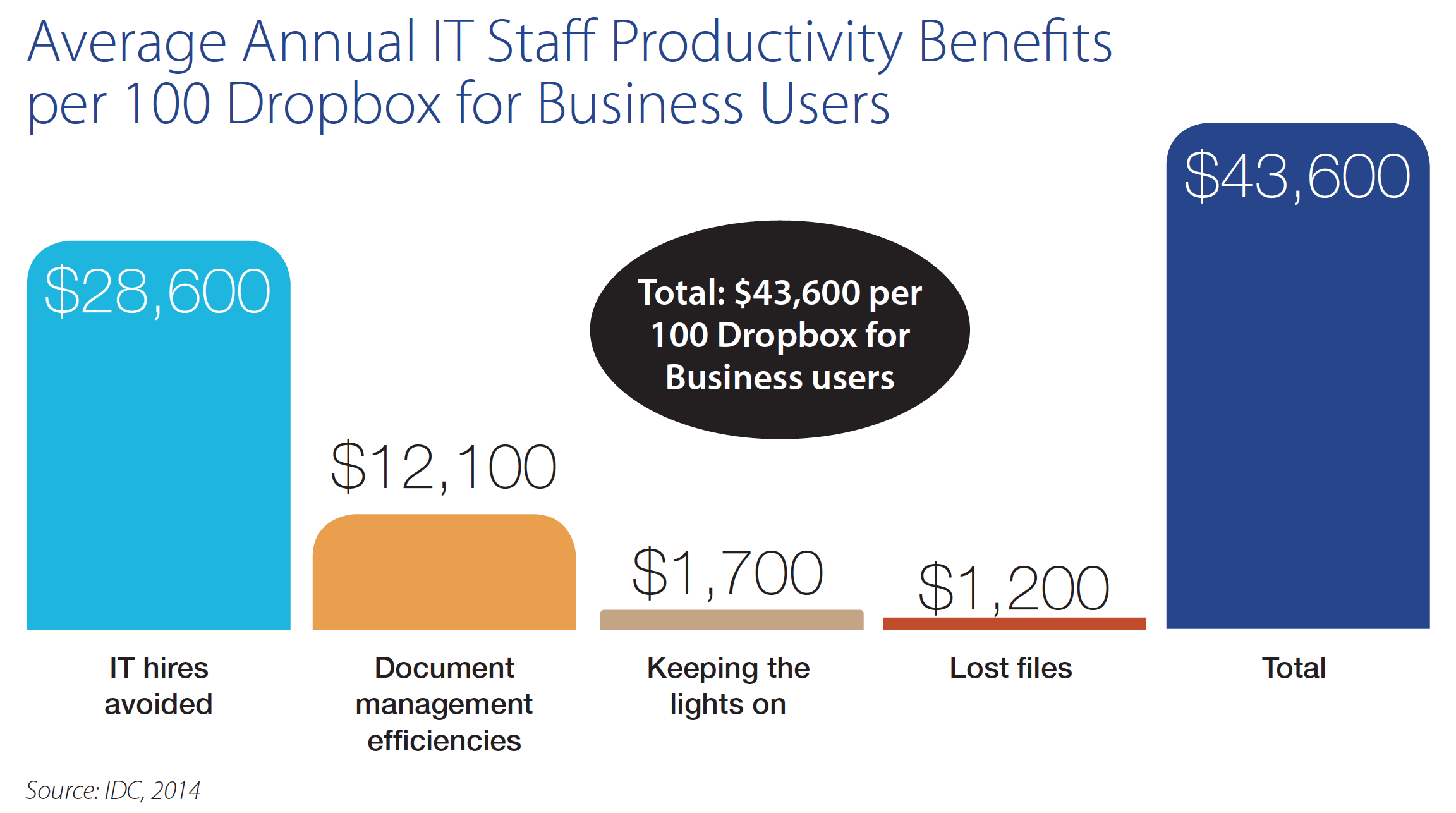

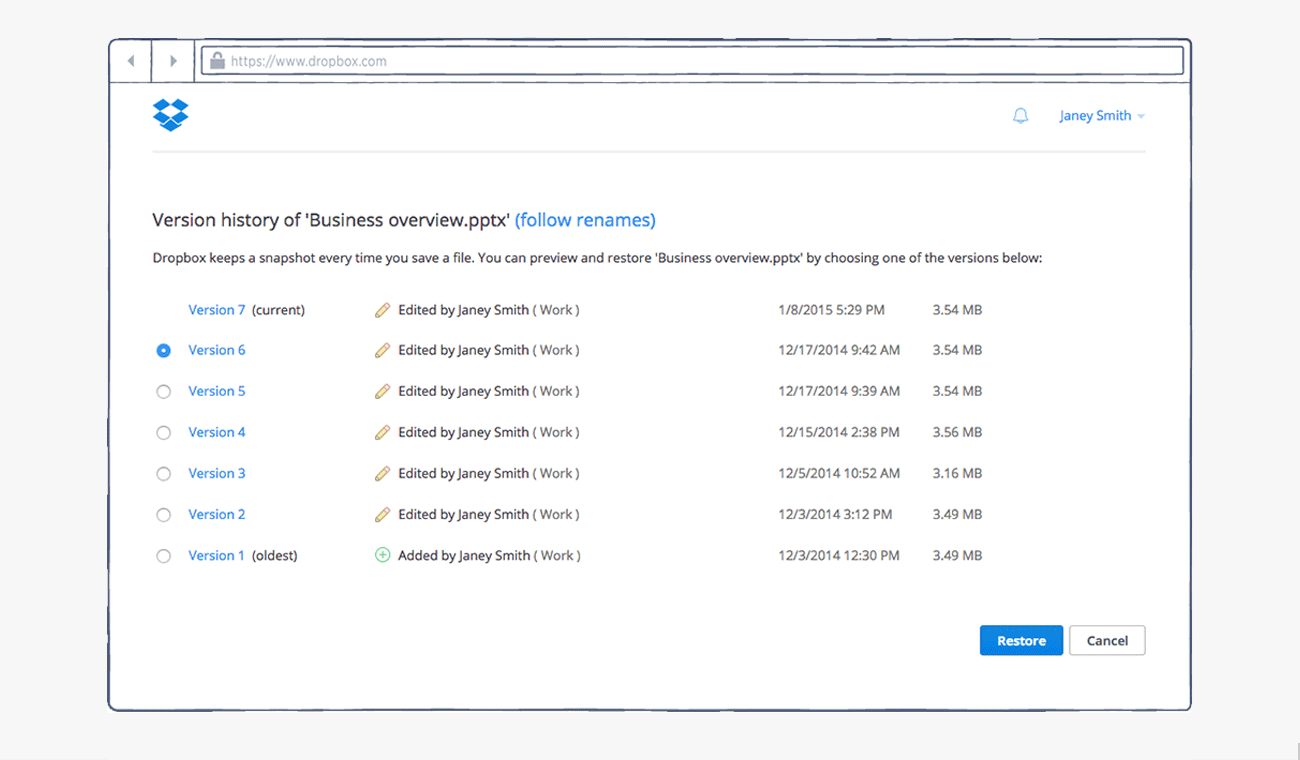

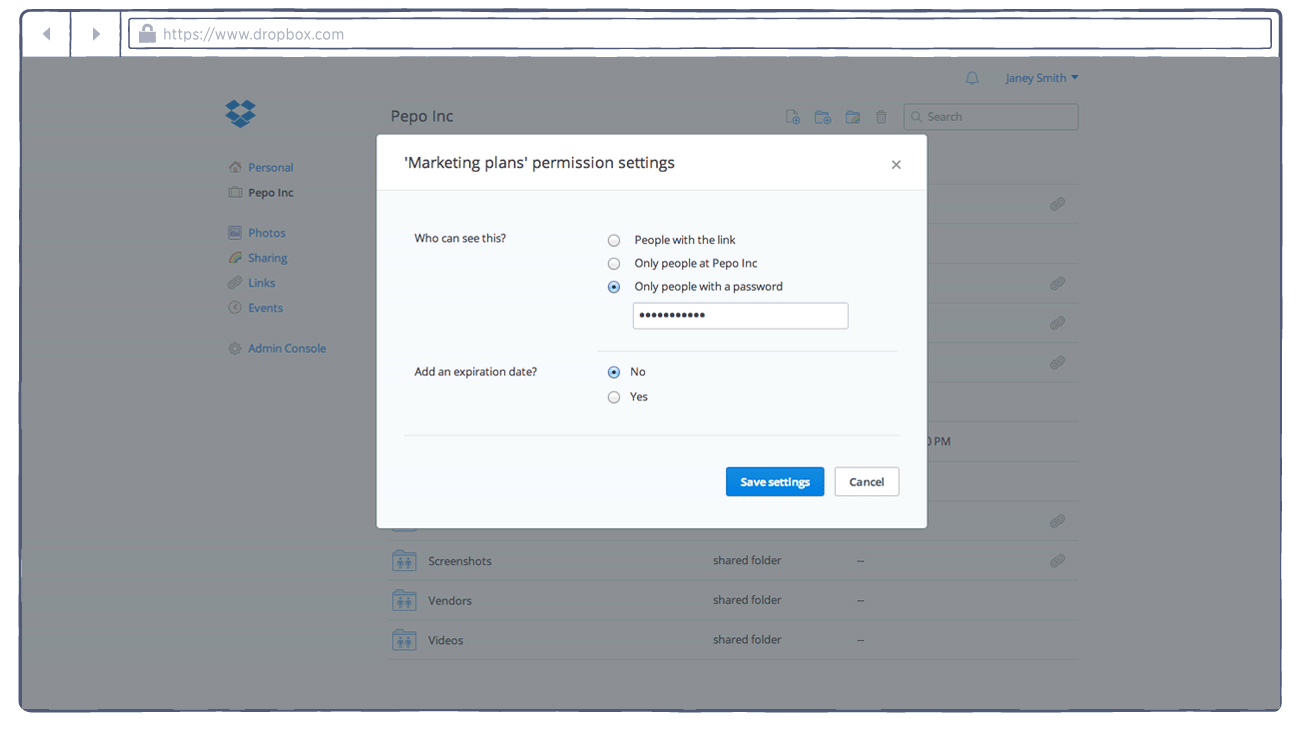

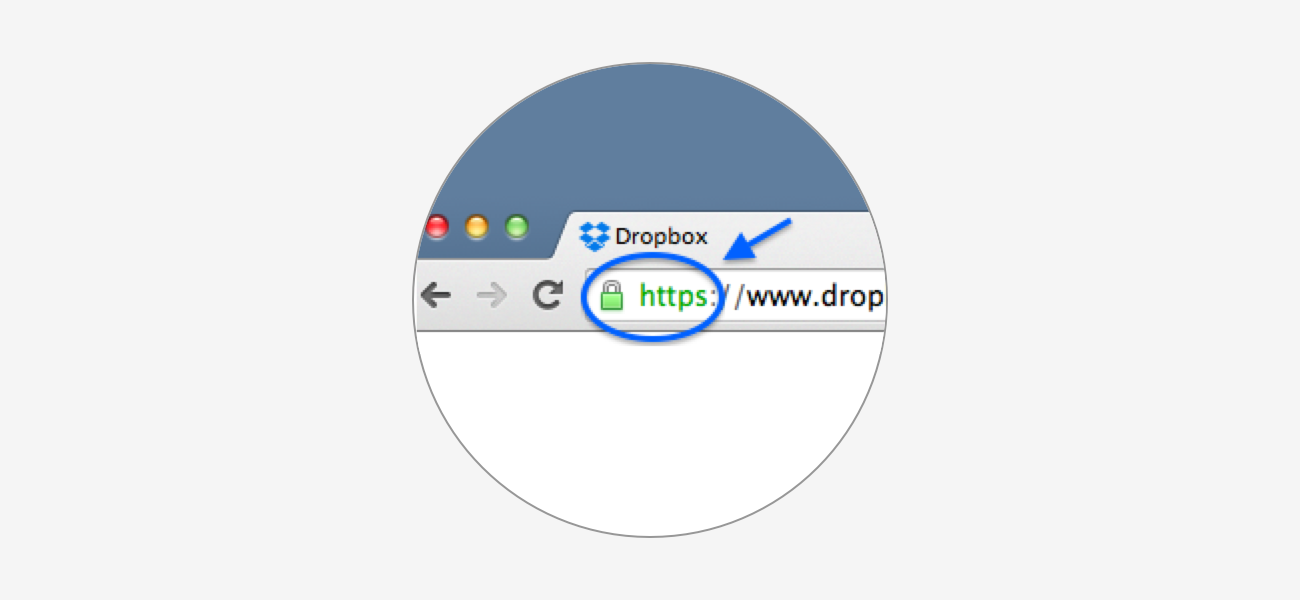

What are some of those rules? mpathic’s technology has detected 50+ types of corrections. (They’ve sent out a patent application for the tech, using Dropbox and HelloSign to submit it.) A few of these rules may sound familiar, but just because we know them doesn’t mean they’re used as often as they should be.

Using reflective listening and being able to repeat back and summarize what the other person said is one. Replacing closed questions (that read more like statements than actual questions) with open-ended ones that invite collaboration and curiosity is another.

Sometimes mpathic will prompt you to ask for ideas from the person you’re speaking to, or ask for their permission before offering your two cents, which creates an opportunity for collaboration rather than confrontation. In the “I quit!” text where the boss wrote “This type of behavior isn’t going to get you anywhere here,” mpathic suggested “What would help you to succeed in this environment?” This sentence’s transformation acknowledges that there are things that just have to get done at work, and opens the conversation up to other ideas of how that can happen.

And anyone who has gone to couples therapy to bring more empathy into their relationship will know this one: using “I” statements can feel less confrontational than “You” statements.

“mpathic can detect problems in language that aren’t necessarily toxic, but that definitely … deplete people [in conversations],” Lord adds. “Things like starting a sentence with ‘No offense’ or ‘Well, actually…’ or ending a sentence with ‘Sorry if you felt that way.’”
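To make the idea of “rules” concrete, here is a toy sketch of what flagging those depleting phrases could look like. It is not mpathic’s model, which the article describes only at a high level; the rule table, the `flag_message` function, and the coaching notes are all hypothetical, and only the three example phrases come from Lord’s quote above.

```python
import re
from dataclasses import dataclass
from typing import List

# Hypothetical rule table: a pattern plus a short coaching note.
# The phrases come from Lord's examples; the notes are illustrative only.
RULES = [
    (re.compile(r"^\s*no offense\b", re.IGNORECASE),
     "Opening with 'No offense' tends to signal that offense is coming."),
    (re.compile(r"^\s*well,? actually\b", re.IGNORECASE),
     "'Well, actually' can read as a correction rather than a contribution."),
    (re.compile(r"sorry if you felt that way[.!]?\s*$", re.IGNORECASE),
     "This apology puts the problem on the other person's feelings."),
]


@dataclass
class Flag:
    phrase: str  # the text that triggered the rule
    note: str    # the coaching note shown to the sender


def flag_message(message: str) -> List[Flag]:
    """Return one Flag for every rule matched in any sentence of the message."""
    flags = []
    # Split into rough sentences so the start/end anchors apply per sentence.
    for sentence in re.split(r"(?<=[.!?])\s+", message.strip()):
        for pattern, note in RULES:
            match = pattern.search(sentence)
            if match:
                flags.append(Flag(match.group(0).strip(), note))
    return flags


if __name__ == "__main__":
    draft = "No offense, but the deck needs work. Sorry if you felt that way."
    for flag in flag_message(draft):
        print(f"{flag.phrase!r}: {flag.note}")
```

A keyword list like this only catches exact phrasings. The tool described here also rewrites closed questions and suggests alternatives, which simple pattern matching cannot do.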

AI as an empathy ‘autotune’

But is following an AI’s suggestions really being empathic? Well, before we can tackle that question, we have to realize that most people’s working definition of empathy needs an update. Research has undone the notion that empathy means being able to walk a mile in someone else’s shoes, an idea that gained popularity in the ’80s, Lord says. That concept might actually have the opposite effect, explains Amber Jolley-Paige, mpathic’s clinical empathy designer.

“It could create a sense of distance, or a sense of fear,” she says. “[Some] people might be thinking, ‘Well, I’ll never be able to fully understand what that person is going through, so I’m not going to try.’”

Instead, it’s better to think of empathy as accurately understanding someone, and having that person confirm that they were understood.

“That doesn’t necessarily mean that we’re going to come to a resolution,” Jolley-Paige says. “But it is important [to have] that understanding, and letting that person know that they are seen and that they are validated. That in and of itself can go a long way to creating that foundation for working through miscommunications or potential conflict.”

That criterion for understanding is necessary in all kinds of communication, but it feels especially important, and harder to come by, while trying to work “in these times.” Working virtually requires taking extra steps to ensure that we understand or that we’re understood. In the before times, that sometimes looked like what’s known as “synchrony,” Lord explains, or how an empathetic person tends to match the vocal tones of others, sometimes down to the way they construct their sentences. It’s that “Yes! This person gets it!” moment that happens when we truly feel seen.

When researchers analyzed who gets high empathy ratings in counseling sessions, “we find it’s not because they use the most skills,” Lord says. “It’s because they really were invested in wanting to understand this other person, and start[ed] to autotune to them.”

There are way more barriers to that autotuning in remote work, including the loss of context that can happen when using digital communication tools. We’re on autopilot, just trying to do the best that we can in still fairly new circumstances.

“It’s very difficult to get out of our ways that we normally react, and [to] start to [think], ‘Well, what if I were to understand this person and really try to understand where they’re coming from in this moment, rather than convince them of my point?’” Lord says. With mpathic, she adds, “we can help people autotune to each other.”

Applying AI’s rules IRL

Humans need that kind of help. While a 2020 businessolver survey on workplace empathy found that 73% of employees and 80% of CEOs believed empathy could be taught, science hasn’t backed that up. Research trials focused on trying to teach therapists found that the lessons don’t stick. While workshops helped participants learn more about empathy as a concept, their ability to actually use those skills was a different story.

“Within days, like 50% of the skills [are] lost,” she says. “Within a month, almost 100%. And that’s self-selected folks [who] are saying, ‘I want to learn how to understand people’ [but who end up being] very bad at applying those rules consistently.”

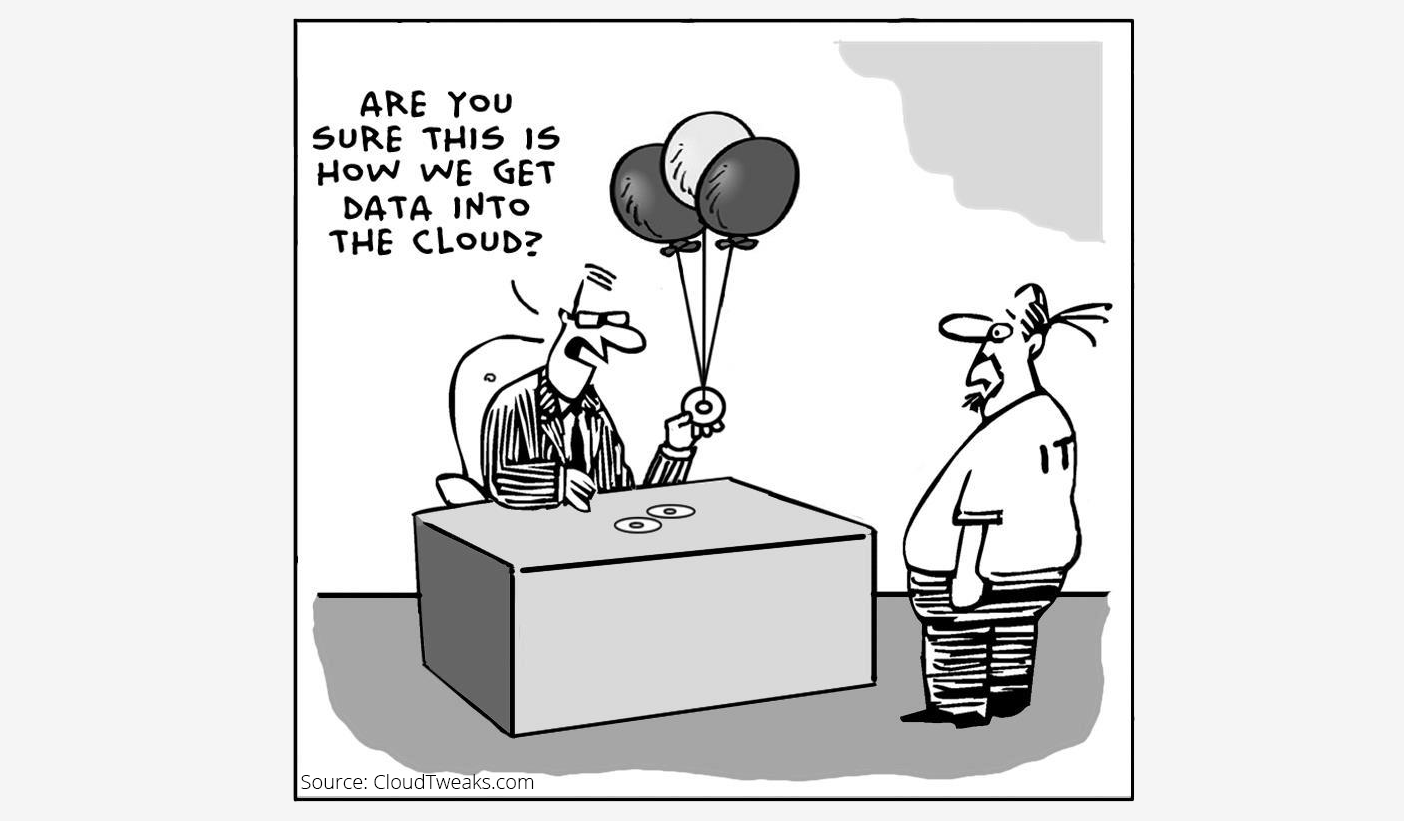

If therapists (“supposedly some of the most empathic people,” Lord points out) can’t be taught to practice empathy, then the odds don’t look that good for the rest of us. While AI is unlikely to teach empathy any better, mpathic’s creators believe it can help people augment their behavior by showing us the rules, and then helping us follow them thanks to what Jolley-Paige calls “the power of the pause.”

By saying “‘Hey, are you sure this is how you want to phrase this?’ it’s giving people a chance to pause and to take a step back,” Jolley-Paige says.

But knowing and following the rules is no replacement for actual human connection, something AI still can’t quite grasp. (And if it does, we have bigger things to worry about than rude text messages from a boss or colleague.)

“Even if you say the exact right thing in the right moment, it doesn’t mean that you’re going to be closer to your colleagues, or that you’re going to have a better relationship with your team,” Lord says. “Some of our prompts have to do with language, but some of them have to do with, ‘You need a connection in this moment, and that connection is not going to happen on a Slack or email.’”
