On March 2, 1959, a group of Australians gathered to celebrate a groundbreaking ceremony at Bennelong Point in central Sydney, unknowingly watching the start of one of the most disastrous construction projects in human history.

Joseph Cahill, Premier of the state, nervously hammered a commemorative plaque into the ground, marking the official start of construction of the Sydney Opera House. As soon as the plaque was in place, thousands of workmen descended on the site, turning the peaceful peninsula into a cacophony of noise.

Cahill had spent the previous five years championing the Opera House project and defending its enormous AUD $215 million price tag. But as the noise of the pneumatic jackhammers grew louder, Cahill felt all the stress fall from his shoulders. The project was underway and nothing could stop it now.

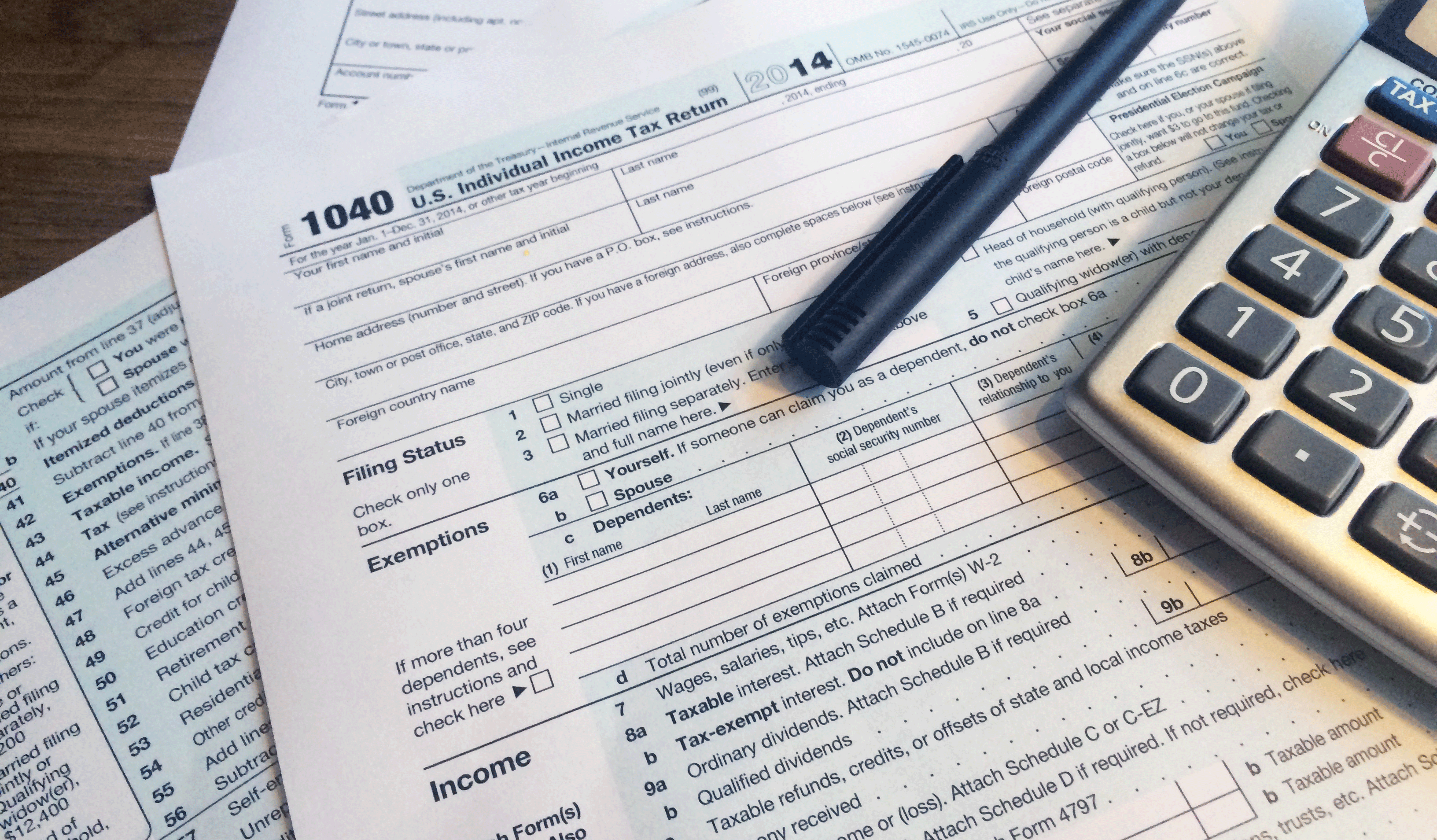

Cahill knew that large civil projects like the Opera House were always riddled with delays and overspends. He’d watched countless deadlines slip and dozens of budgets grow out of control in other projects. But the Opera House was different. He’d developed an ambitious timeline to assemble all 588 concrete pillars, 2,400 concrete ribs and 1,056,006 ceramic tile chevrons. He’d optimized every process and mitigated every risk. His project would be different.

But four years later, Cahill’s timeline was in tatters and the Opera House was an empty concrete skeleton.

The usual litany of problems had reared their ugly heads: modified requirements, compounding costs, changes in organizational priorities, poor estimates, cash flow issues and relationship breakdowns. The list goes on and on. In the end, the Sydney Opera House didn’t take four years to finish; it took 14. And it didn’t cost AUD $215 million; it cost AUD $3.1 billion, a 1,340% overrun.
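For readers who want to check the arithmetic, here is a minimal sketch of how that overrun figure falls out of the two cost numbers quoted above. The function name `percent_overrun` is just an illustrative helper, not anything from the original project records.

```python
def percent_overrun(estimate: float, actual: float) -> float:
    """Return the overrun as a percentage of the original estimate."""
    return (actual - estimate) / estimate * 100


# Figures as quoted in the article, in Australian dollars.
budget = 215e6      # original price tag
final_cost = 3.1e9  # final cost

overrun = percent_overrun(budget, final_cost)
schedule_ratio = 14 / 4  # years taken vs. years planned

print(f"Cost overrun: {overrun:,.0f}%")          # about 1,340% after rounding
print(f"Schedule: {schedule_ratio:.1f}x the plan")
```

The exact result is a shade over 1,340%, which is where the rounded figure comes from.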

Cahill’s failure is amazing because he was surrounded by evidence telling him that his project timeline was unachievable. But despite all the contradictory evidence, he still insisted that he could do it.

The interesting thing is that while Cahill’s mistakes are obvious in hindsight, they probably felt right at the time. That’s because his faulty thinking was driven by something called a cognitive bias, a systematic error in thinking that affects our decisions.

In 1979, two psychologists, Daniel Kahneman and Amos Tversky, put a label on this particular cognitive bias, dubbing it the planning fallacy. “The planning fallacy is that you make a plan, which is usually a best-case scenario,” wrote Kahneman in his paper Intuitive Prediction: Biases and Corrective Procedures. “Then you assume that the outcome will follow your plan, even when you should know better.”

While Cahill’s calamitous project is one of the worst examples of poor planning, it’s far from the only example of the planning fallacy.

Entrepreneurs and managers massively underestimate how much they will need to invest to bring their ideas to market and how long that will take. (As Guy Kawasaki quips: “As a rule of thumb, when I see a projection, I add one year to delivery time and multiply revenues by 0.1.”) Sales managers set overly ambitious sales quotas, causing over half of all sales teams to miss their targets. And meetings chronically overrun their wildly inaccurate time estimates. (Wasteful meetings are now cited as the leading cause of work disruption.) Life, it seems, is rife with egregious plans.

The planning fallacy—and other cognitive biases—can teach us a huge amount about how our minds work. And once we understand how cognitive biases are contributing to our poor decision making, we can start mitigating those biases and improving our judgments.

Insidious, unknown and disruptive

The planning fallacy was one of the first documented cognitive biases. However, not all cognitive biases result in multi-billion dollar overspends and decade-long overruns. Most biases are insidious, subtly influencing our every action.

We tend to remember only the good parts of our holidays (recall bias), ignore evidence that contradicts our beliefs (confirmation bias) and incorrectly judge probabilities based on how many examples spring to mind (availability bias).

Left unchecked, these biases can lead to faulty decision making, derailing personal and organizational productivity.

Jessica Prater is an organizational psychology specialist who spent the bulk of her early career within a Global Fortune 500 company. Early on, Prater realized managers were drifting away from the company’s robust employee review process.

“Annual reviews were completed by managers and employees were evaluated on organizational competencies as well as yearly goals,” explained Prater. “But a manager tended to be drawn by what has happened most recently as it may be harder to remember the events from further back. Specifically, they may struggle to remember good performance when something bad has happened recently.”

Prater immediately recognized this as the recency bias, which is where people overvalue the importance of things that happened recently compared to events that happened further in the past.

“I recognized this was an issue because multiple times we saw increased team tension and blame game around performance review time,” said Prater. “There was also increased tension because of the fear of being left with the 'hot potato' of a failure that would cause recency bias with their review.”

The managers Prater worked with probably believed they were rational people. They believed they were fairly reviewing their employees’ performance based on the information available to them. People have believed such things about themselves for centuries. In fact, the dominant economic theory of the twentieth century, Chicago School economics, was built on the assumption that individuals were all rational economic people. But that might not be true.

Rebuilding economics

In the early 1970s, economist Richard Thaler was struggling with his doctorate. He faced a hidebound discipline wedded to mathematical models and perfectly rational consumers. But Thaler’s work eschewed the assumption of rationality, instead arguing that economics should account for emotional influences and mental quirks like cognitive biases.

“We were worried that he might not get tenure,” explained fellow economist Daniel Kahneman. “Everybody knew that he was bright, that he was brilliant. But he wasn’t doing anything that they considered to be economic research. He wasn’t doing anything that was mathematical.”

Thaler stuck with his research, eventually completing his doctorate in 1974. He spent the late 1970s at Stanford University, where he was exposed to Kahneman’s work on systematic bias. Working within Kahneman’s theoretical framework, he published a paper called ‘Toward a Positive Theory of Consumer Choice’ in 1980. Thaler’s paper took a hammer to the status quo, smashing the idea of the rational economic person to pieces and kickstarting a revolution in behavioural economics.

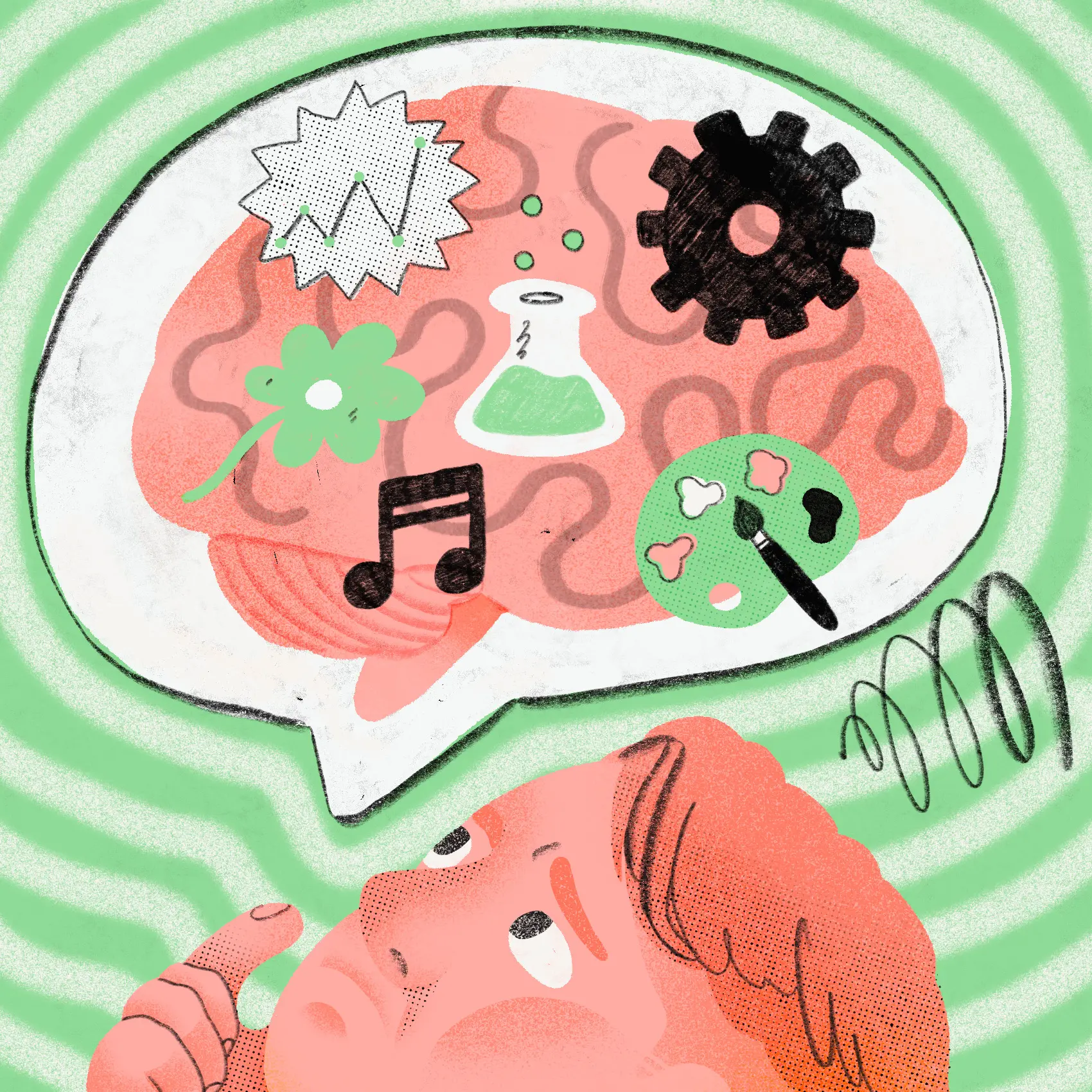

Over the next few decades, Thaler and his contemporaries would identify dozens of other cognitive biases that haunt our decision making. They found that people are inclined to make decisions based on how readily information is available to them. This is called the availability heuristic. People are also more likely to stick with the status quo despite the possibility of big gains from change. This is called status quo bias. And we all believe we’re less likely to suffer misfortune, and more likely to attain success, than we really are. This is called the optimism bias.

Writing for the Guardian, philosopher Galen Strawson summarized the cognitive bias revolution with a deeply uncomfortable idea: “We don't know who we are or what we're like, we don't know what we're really doing and we don't know why we're doing it.”

Understanding our reptilian brain

To understand how we make decisions, it helps to understand where our brains come from. The human brain—or parts of it—is astonishingly old. The oldest parts (the brainstem and the cerebellum) date back over 500 million years to a common vertebrate ancestor. Biologists call this region the reptilian area and its focus is simple: avoid pain and keep you alive.

Our brains have come a long way since then. We developed the limbic system, which regulates emotion, behaviour, motivation, memory and olfaction. Then came the neocortex, which controls perception, cognition, spatial reasoning and language. According to Daniel Kahneman in Thinking, Fast and Slow, this multi-layer brain development has created two distinct ways of thinking, which he calls System 1 and System 2.

System 1 thought is dominated by your old reptilian area with some assistance from the limbic system. It operates automatically and quickly, with little or no effort and no sense of voluntary control. When someone steps out in front of your car and you instinctively swerve to avoid them, that’s your System 1 thought taking control.

System 2 thinking comes from your evolutionarily younger neocortex. It allocates attention to complicated mental activities like agency, choice and concentration. When you carefully compare the benefits of two competing job offers, that’s System 2 in full swing.

Both systems of thought are necessary and valuable but they have their limitations. For example, when you rely on the complex System 2 to make split-second decisions, you might hit the oncoming car while deciding which way to swerve. When you rely on reactive System 1 thinking to make complex decisions, your brain takes shortcuts and you start experiencing all sorts of cognitive bias.

An introduction to debiasing

Imagine you are reviewing internal applicants for a promotion. The first applicant, let’s call her Audrey, looks good on paper so you take a look at her performance reviews. The first review you pick up is less than complimentary and questions her work ethic and talent. The rest of her reviews are much more positive, praising her contributions, skills and attention to detail. But that first review sticks in your mind. It’s given you a bad feeling and you always trust your gut. You toss Audrey’s resume in the rejected pile and move on to the next application.

Did you make the right decision by trusting your gut? Or did your reptilian brain cling onto the first piece of information you gave it?

Identifying bias—for example, the anchoring bias above—is only the first step in debiasing your decision making. Just being aware of your bias triggers some short-term mitigation, but the impact of that knowledge fades over time. Real debiasing needs structural change and for that you’ve got two options: modify the decision maker or change the environment.

The first way to improve your decision making is to modify the decision maker. According to researchers Soll, Milkman and Payne, modifying the decision maker means teaching people the “appropriate rules and principles, to bridge the mindware gap.” Practically, this means equipping people with the knowledge and tools they need to mitigate the effect of biases.

For example, researchers Koriat, Lichtenstein and Fischhoff recommend that decision makers set aside time to purposefully consider why their reasoning might be wrong. This technique helps decision makers to stop focusing too narrowly on supporting evidence and to seek out contradictory information.

But the effects of education are often domain-specific and tend to fade over time. Meteorologists who have extensive training in probability can predict rainfall with astonishing success but are as poor as anyone else at predicting the stock market.

Your other option, according to Soll, Milkman and Payne, is “to change the environment in which a decision will be made in ways that are likely to reduce the incidence of error.”

For example, public health researcher Atul Gawande highlights pilots’ use of checklists to mitigate biases stemming from memory failure. By modifying a pilot’s decision-making environment, we can induce reflection and ensure they consider each step in a process. And that can mean the difference between a successful emergency landing and a tragic crash.

How to outsmart your biases

By either modifying the decision maker or changing your environment, you can mitigate many common cognitive biases and improve your decision making. Below we’ll look at several effective debiasing techniques for common cognitive biases.

Confirmation bias

According to Peter Lazaroff, “Investors spend most of their time looking for strategies that 'work' or evidence that supports their existing investment philosophy.” Lazaroff asks us to imagine getting an investment tip from a friend. While researching the tip, we find all sorts of positives while glossing over the negatives because we’re trying to "confirm" the return potential of the investment.

This is an example of the confirmation bias as we’re ignoring contradictory evidence and fixating on the nuggets of evidence that support our tip.

To mitigate the confirmation bias, Kahneman recommends assuming that your hypothesis is wrong and searching for an explanation why. In Lazaroff’s example, we would assume our friend’s tip is bad and actively look for red flags. Forcing people to consider that their assumption is faulty makes them far more willing to accept and internalize contradictory information, which reduces the effect of the confirmation bias.

Overconfidence

Between 2003 and 2013, researchers at Duke University asked thousands of chief financial officers (CFOs) to produce one-year forecasts for the S&P 500. All in, they collected more than 13,300 forecasts. At the end, the researchers compared the forecasts to the actual S&P 500 performance and discovered something astonishing: CFOs had no clue about the short-term future of the stock market. In fact, the correlation between their financial forecasts and actual performance was slightly less than zero.

Despite the study’s findings, CFOs remained confident in their abilities to produce positive returns for their shareholders. This is an example of the overconfidence bias where a person’s subjective confidence in their judgments is greater than the observed accuracy.

In order to mitigate the effects of the overconfidence bias, Gary Klein recommends a process called a premortem. “Unlike a typical critiquing session, in which project team members are asked what might go wrong, the premortem operates on the assumption that the 'patient' has died, and so asks what did go wrong,” explains Klein in an article for Harvard Business Review.

Although teams and individuals often discuss risk analysis before beginning a project, few techniques offer the same benefits as the premortem. Premortems provide prospective hindsight, encouraging team members to unleash their creativity in a purposefully pessimistic way.

What you see is all there is

According to a study of 168 business decisions by Chip and Dan Heath, only 29% of business leaders considered more than one option. The rest fell in love with their first idea and ran with it.

This is an example of something Kahneman calls the "what you see is all there is" (WYSIATI) effect. Essentially, our brains look at the limited information immediately available to us and assume that’s all the information available, period.

To counteract WYSIATI, decision experts Chip Heath and Dan Heath propose a simple mental experiment: assume your current options are unavailable and ask, “What else could I do?”

Chip and Dan Heath ask us to imagine a company deciding whether or not to expand into Brazil. Using their debiasing technique, the business assumes that expanding into Brazil isn’t an option, prompting it to consider other options. Perhaps it could expand into a different country, improve existing locations or even launch an online store.

Present bias

While everyone agrees that saving for retirement is important, few people actually sign up for a retirement plan. People spend more time researching new TVs than they do selecting a retirement plan. This is called the present bias, a tendency to overvalue payoffs closer to the present at the expense of those further in the future.

In his book Nudge, Thaler recommends using nudges to mitigate present bias. A nudge is any environmental change that influences behaviour without restricting choice. One example Thaler proposed—and which has been implemented across the world—is default nudges for retirement savings. Instead of requiring employees to opt in to a retirement scheme, Thaler recommends they are automatically enrolled when they enter employment.

After the UK Government implemented Thaler’s retirement saving nudge in 2012, membership of retirement schemes jumped from 2.7 million to 7.7 million in just five years. That’s five million people who are now actively saving for their retirement and they owe it to a subtle nudge.

Debiasing techniques in action

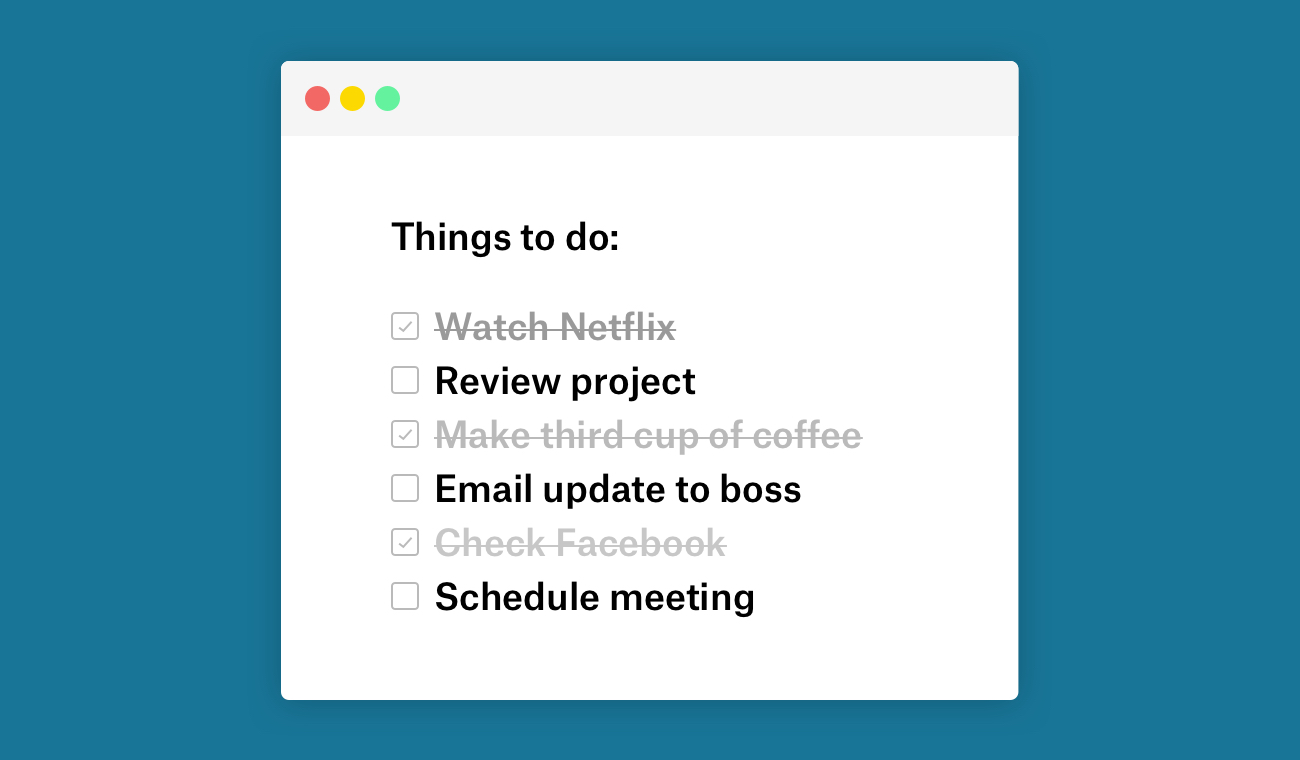

Earlier this year, marketing coordinator Olivia Ravn was tasked with producing an eBook for a new client. In the project initiation meeting, a stakeholder asked her how quickly her team would be able to create the eBook. She assured the stakeholder that everything would be produced, packaged and delivered within four weeks.

After the meeting, Ravn reflected on her answer. She had felt pressured to please the client and had selected the four-week figure because it had felt roughly right at the time. But she wanted to be sure so she went back and reviewed the previous three comparable projects she had led. From start to finish, they had taken between six and eight weeks to deliver, considerably more than the four weeks she had promised.

Without realising it, Ravn had just implemented one of the most effective debiasing techniques for the planning fallacy: the outside view, first conceptualised by Kahneman in 1993. With the outside view, you base your forecasts on an analysis of similar projects. If it always takes a team between six and eight weeks to produce an eBook, that’s probably how long it will take you.
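The outside view is simple enough to sketch in a few lines of code. The example below is only an illustration, not a formal forecasting method: the function name `outside_view` is made up for this sketch, and the individual durations are assumed values, since all we know is that the three earlier projects took between six and eight weeks.

```python
from statistics import median


def outside_view(past_durations_weeks: list[float]) -> dict[str, float]:
    """Summarise how long comparable past projects actually took."""
    if not past_durations_weeks:
        raise ValueError("need at least one comparable project")
    return {
        "low": min(past_durations_weeks),
        "typical": median(past_durations_weeks),
        "high": max(past_durations_weeks),
    }


# Assumed durations for the three comparable projects (hypothetical values
# chosen to fit the six-to-eight-week range described above).
past_projects = [6, 7, 8]
gut_estimate = 4  # the figure promised in the meeting

forecast = outside_view(past_projects)
too_optimistic = gut_estimate < forecast["low"]

if too_optimistic:
    print(
        f"Gut estimate of {gut_estimate} weeks is below every comparable "
        f"project ({forecast['low']}-{forecast['high']} weeks)."
    )
```

The point of the sketch is that the forecast never looks at the details of the new project. It only asks how similar work has gone before, which is exactly what makes the outside view resistant to best-case thinking.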

Ravn went back to her client and admitted that her initial timeline was off. She presented worklogs from her previous projects and explained that a six- to eight-week timeline was more realistic. Ravn says the admission felt slightly embarrassing but believes it saved her from a much more serious discussion with her client down the road.

It’s interesting to imagine what would have happened if Ravn had been in Joseph Cahill’s place back in 1959. Would she have investigated similar projects and discovered the stark disparity between the original Sydney Opera House timeline and the commercial reality of similar projects? Would she have amended the plan? Or would the key stakeholders have scrapped the project altogether? It’s difficult (or impossible) to say. After all, it’s a counterfactual.

What’s more interesting is to recognize that we’re all still repeating Cahill’s mistakes to this day. Product managers still set launch dates based on perfect project conditions, journalists still promise first drafts assuming words will flow effortlessly from their fingers and creative directors still agree to rebranding schedules confident that their client will love their first concept.

More than anything, though, it’s important to realize that if we want to do truly great work and produce truly great things, it starts with identifying and then eradicating our cognitive biases. By addressing cognitive biases we can make more effective decisions and improve our performance in the workplace and our personal lives.
